How Notion develops world-class AI features

With Simon Last, Co-Founder

Notion, a connected workspace for documents, knowledge bases, and project management systems, is the go-to platform for millions of users, from agile startups to global enterprises. Notion’s commitment to customer experience has always set them apart — but with the rise of generative AI, they saw an opportunity to push their platform even further.

Co-founders Ivan Zhao and Simon Last were early in recognizing the potential of generative AI and started experimenting with large language models (LLMs) soon after GPT-2 launched in 2019. Given that context, it’s no surprise that Notion was one of the first teams to integrate GPT-4 into their product:

- In November 2022, they introduced Notion AI, a writing assistant (2 weeks before ChatGPT was released)

- In June 2023, they introduced AI Autofill, a tool to generate summaries and run custom prompts across an entire workspace

- And in November 2023, they introduced Notion Q&A for chatting with your entire Notion workspace

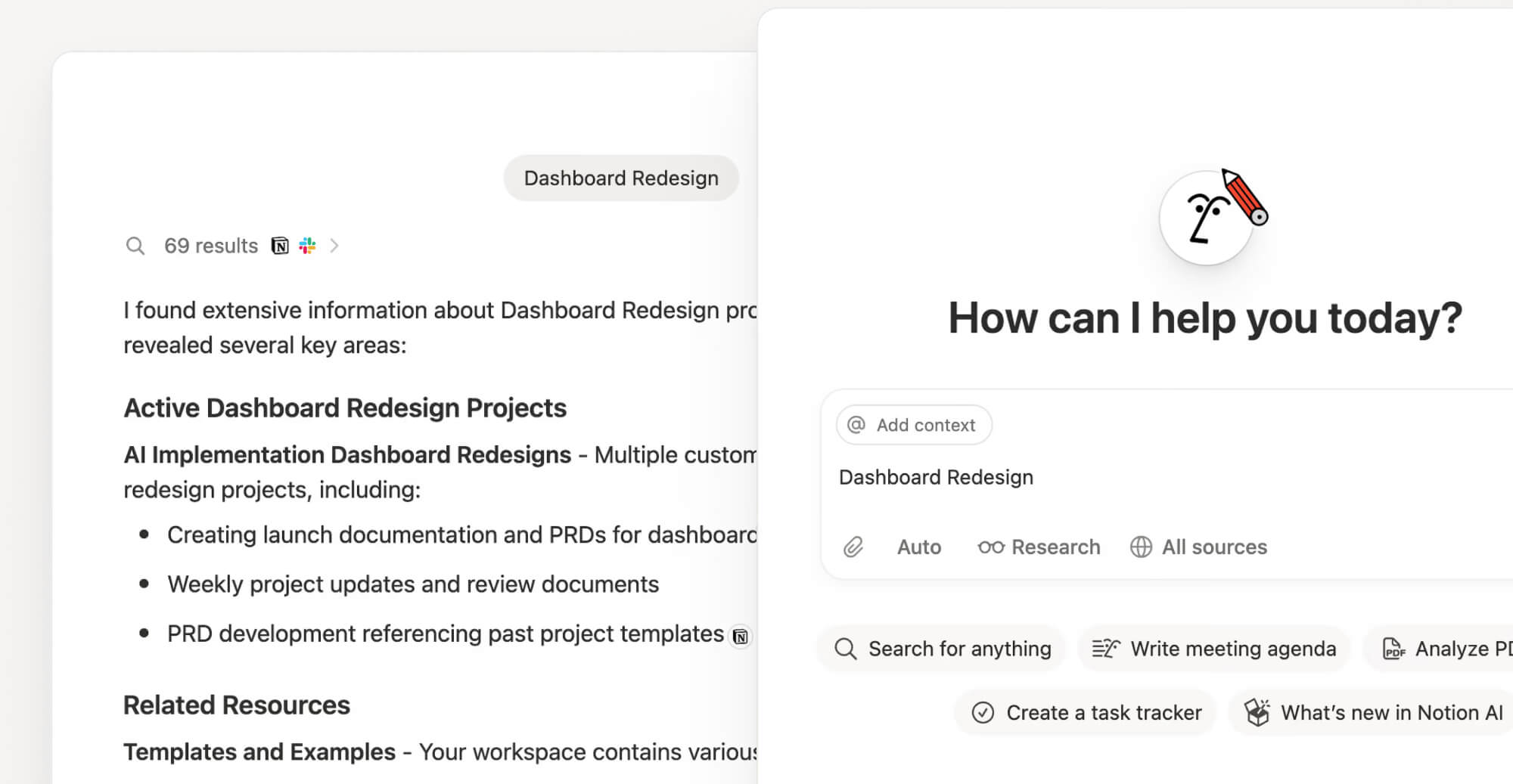

Today, those features (and much more!) make up Notion AI, which powers four essential capabilities: searching workspaces, generating and editing tailored documents, analyzing PDFs and images, and answering questions using information from both your workspace and the web. However, as the team rolled out these features, they realized their existing workflows for evaluating AI products weren’t up to the challenge.

In this case study, we’ll explore how the team at Notion evolved their evaluation workflow, dramatically improving their AI development speed and accuracy, by partnering with Braintrust.

Evaluating Notion Q&A

One of Notion’s earliest AI innovations was its Q&A feature. With Q&A, users could ask complex questions, and AI would pull data directly from their Notion pages to provide insightful responses. It was a magical customer experience — despite the broad and unstructured input and output space, it almost always returned a helpful response.

Behind this magic was an immense engineering effort. Notion’s AI team did a ton of work to ensure Q&A could understand diverse user queries and generate a helpful output. However, the complexity of the product meant that their evaluation process (how they tested and improved the AI’s performance) needed an upgrade.

Initially, Notion’s evaluation workflow was manual and intensive:

- Large and diverse datasets were stored as JSONL files in a git repo, making them difficult to manage, version, and collaborate on.

- Human evaluators scored individual responses, resulting in an expensive and time-consuming feedback loop.

While this process helped Notion successfully launch Q&A in beta in late 2023, it was clear they needed a more scalable, efficient approach to take their AI products to the next level. That’s where Braintrust came in.

Notion’s evals today

Partnering with Braintrust transformed Notion’s eval workflow, enabling faster iterations and ultimately higher quality features in production. Here’s an overview of the new workflow:

1. Decide on an improvement

The first step is to decide on an improvement. It could be adding a new feature, fixing an issue based on user feedback, or enhancing existing capabilities. In the newest Notion AI product, users can ask questions and chat about anything — from the work context that lives in their Notion pages, to unrelated ideas and concepts across a wide variety of topics, powered by LLMs.

2. Curate targeted datasets

Instead of manually creating JSONL files, Notion now uses Braintrust to curate, version, and manage datasets. They typically start with 10-20 examples, drawn from two sources: real-world usage that is automatically logged in Braintrust, and examples written by hand. To give you a sense of how important this step is, Notion has hundreds of eval datasets, and the number grows every week.
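To make the curation step concrete, here is a minimal sketch in plain Python of a small, versioned dataset built from logged and hand-written examples. It does not use the Braintrust SDK and is not Notion's implementation. The `Example` and `Dataset` classes, the `build_dataset` helper, the dataset name, and the sample questions are all hypothetical, chosen only to illustrate the idea.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Example:
    """One eval case. All field names here are illustrative assumptions."""
    input: str
    expected: str
    source: str  # "logged" (from real usage) or "handwritten"


@dataclass
class Dataset:
    name: str
    examples: list = field(default_factory=list)
    version: int = 0  # bumped on every change so an eval run can pin a version

    def add(self, example: Example) -> int:
        self.examples.append(example)
        self.version += 1
        return self.version


def build_dataset(name, logged, handwritten):
    """Merge logged and hand-written (input, expected) pairs, skipping duplicate inputs."""
    dataset = Dataset(name)
    seen = set()
    for source, rows in (("logged", logged), ("handwritten", handwritten)):
        for question, expected in rows:
            key = question.strip().lower()  # normalize before the duplicate check
            if key in seen:
                continue
            seen.add(key)
            dataset.add(Example(question, expected, source))
    return dataset


# Made-up sample data for illustration only.
qa_dates = build_dataset(
    "qa-date-questions",  # hypothetical dataset name
    logged=[("When is the launch review?", "March 3")],
    handwritten=[
        ("when is the launch review? ", "March 3"),  # duplicate of the logged case
        ("Who owns the Q3 roadmap?", "Priya"),
    ],
)
print(qa_dates.name, "version", qa_dates.version, "with", len(qa_dates.examples), "examples")
```

Keeping a `source` tag on each example makes it easy to see later whether a failing case came from real usage or was written to probe a specific behavior.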

3. Tie these datasets to specific scoring functions

Notion’s approach is to clearly, often narrowly, define the scope of each dataset and scoring function. For more complex tasks, Notion will use a variety of well-defined evals, each testing for specific criteria. Typically, this is a mix of heuristic and LLM-as-a-judge scorers, along with a healthy dose of human review. Because Braintrust allows users to define their own custom scoring functions, Notion can flexibly test anything — tool usage, factual accuracy, hallucinations, recall, and more.
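As a rough illustration of narrowly scoped scorers, the sketch below pairs one heuristic scorer with one LLM-as-a-judge scorer, each returning a score between 0 and 1. This is plain Python, not the Braintrust API and not Notion's code. The function names, the A/B/C grading scale, and the prompt wording are assumptions, and the judge is a stub so the example runs without a model call.

```python
from typing import Callable

# Grade-to-score mapping for the judge; the A/B/C scale is an assumption.
GRADES = {"A": 1.0, "B": 0.5, "C": 0.0}


def mentions_expected_page(output: str, expected_page: str) -> float:
    """Heuristic scorer: 1.0 if the answer names the expected page, else 0.0."""
    return 1.0 if expected_page.lower() in output.lower() else 0.0


def make_judge_scorer(judge: Callable[[str], str]) -> Callable[[str, str, str], float]:
    """Wrap any prompt -> reply callable as a scorer returning a value in [0, 1]."""

    def score(question: str, output: str, expected: str) -> float:
        prompt = (
            "Grade the answer for factual accuracy against the reference.\n"
            f"Question: {question}\nAnswer: {output}\nReference: {expected}\n"
            "Reply with a single letter: A (correct), B (partly correct), C (wrong)."
        )
        reply = judge(prompt).strip().upper()
        # Unparseable replies score 0.0 so they surface during review.
        return GRADES.get(reply[:1], 0.0)

    return score


def stub_judge(prompt: str) -> str:
    """Stand-in for a real model call so the sketch runs offline."""
    return "B"


accuracy = make_judge_scorer(stub_judge)
answer = "The launch review is on March 3 (see Launch Plan)."
print(mentions_expected_page(answer, "Launch Plan"))
print(accuracy("When is the launch review?", answer, "March 3"))
```

Each scorer checks exactly one criterion, which keeps a low score easy to interpret: a failure on `mentions_expected_page` points at retrieval or citation, while a low judge grade points at the content of the answer.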

4. Run evals & inspect results

After making an update, Notion leverages Braintrust to immediately understand how performance changed overall and drill down into specific improvements and regressions. The team follows this rough process:

- Home in on specific scorers and test cases to determine whether the update led to the targeted improvement

- Inspect all scores holistically to ensure there were no unintended regressions

- Dig into failures and regressions (typically indicated by low scores) to understand where the application is still failing

- Diff outputs from multiple experiments side-by-side

- Optional: curate additional test examples to add to datasets
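The inspection steps above can be sketched as a comparison between two experiment runs. The example below is an illustrative assumption in plain Python, not Braintrust's implementation: each run is modeled as a mapping from test case ID to per-scorer scores, and the function names, scorer names, and sample scores are made up.

```python
from statistics import mean


def compare_experiments(baseline, candidate, tolerance=0.0):
    """Return (improvements, regressions) as lists of (case_id, scorer, delta).

    Only cases and scorers present in both runs are compared.
    """
    improvements, regressions = [], []
    for case_id in sorted(baseline.keys() & candidate.keys()):
        shared_scorers = baseline[case_id].keys() & candidate[case_id].keys()
        for scorer in sorted(shared_scorers):
            delta = candidate[case_id][scorer] - baseline[case_id][scorer]
            if delta > tolerance:
                improvements.append((case_id, scorer, delta))
            elif delta < -tolerance:
                regressions.append((case_id, scorer, delta))
    return improvements, regressions


def mean_by_scorer(run):
    """Average each scorer across all test cases for the holistic view."""
    collected = {}
    for scores in run.values():
        for scorer, value in scores.items():
            collected.setdefault(scorer, []).append(value)
    return {scorer: mean(values) for scorer, values in collected.items()}


# Made-up scores for two hypothetical runs.
baseline = {
    "q1": {"recall": 0.5, "no_hallucination": 1.0},
    "q2": {"recall": 1.0, "no_hallucination": 1.0},
}
candidate = {
    "q1": {"recall": 1.0, "no_hallucination": 1.0},
    "q2": {"recall": 1.0, "no_hallucination": 0.0},
}

improvements, regressions = compare_experiments(baseline, candidate)
print("improved:", improvements)
print("regressed:", regressions)
print("candidate means:", mean_by_scorer(candidate))
```

In this sample the targeted `recall` improvement on `q1` landed, but `q2` regressed on `no_hallucination`, which is the kind of unintended side effect the holistic pass is meant to catch.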

5. Iterate quickly

The team repeats the cycle (make an update, run evals, inspect results) until they’re happy with the improvements and ready to ship. Then it’s on to the next!

The results

The team at Notion is now able to triage and fix 30 issues per day, compared to just 3 per day using the old workflow. That tenfold increase allows them to deliver even better products, like their new suite of incredible AI features. By redefining their workflow, Notion has set a new standard for how AI product teams can evaluate and improve their generative AI products.

Conclusion

Generative AI is redefining what’s possible in software, and it’s been inspiring to watch Notion leverage AI to push the boundaries of excellent user experience. With Braintrust, Notion has created an evaluation workflow that empowers its AI team to ship faster and build with confidence.
