7 best AI observability platforms for LLMs in 2025

Quick comparison of the best AI observability platforms for LLMs:

- Best overall (improvement loop): Braintrust

- Best open source: Langfuse

- Best for LangChain: LangSmith

- Best for ML + compliance: Fiddler

The question has changed. A year ago, teams building with LLMs asked "Is my AI working?" Now they're asking "Is my AI working well?"

When you're running a chatbot that handles 50,000 conversations a day, "it returned a response" isn't good enough. You need to know which responses helped users, which ones hallucinated, and whether that prompt change you shipped on Tuesday made things better or worse. Traditional monitoring tools track metrics like uptime and latency, but they don't review and score live answers from AI agents.

This is where AI observability comes in. The teams winning aren't just shipping AI features; they're building feedback loops that make those features better every week. The right AI observability platform is the difference between flying blind and having a system that improves itself.

What is AI observability?

AI observability monitors the traces and logs of your AI systems to tell you how they are behaving in production. Contrary to traditional software observability, AI observability goes beyond uptime monitoring to answer harder questions: Was this output good? Why did it fail? How do I prevent it from failing again?

The line between "logging tool" and "observability platform" comes down to what happens after you capture data. Basic logging stores your prompts and responses. Maybe you get a dashboard showing request volume and error rates. That's useful for the first week, but it stops being useful when you have 100,000 logs and no way to know how your AI systems are performing.

A modern AI observability platform goes beyond passive monitoring by tightly integrating debugging, evaluation, and remediation into the development lifecycle. Production logs are correlated with traces and model inputs, which feed directly into automated evaluations running in CI/CD. When regressions or failures are detected, those cases are automatically captured as reusable test datasets, turning real-world incidents into guardrails for future releases. Rather than simply explaining what happened, the platform closes the loop by helping teams fix issues and continuously verify that the fix holds in production.

The 7 best AI observability platforms in 2025

1. Braintrust

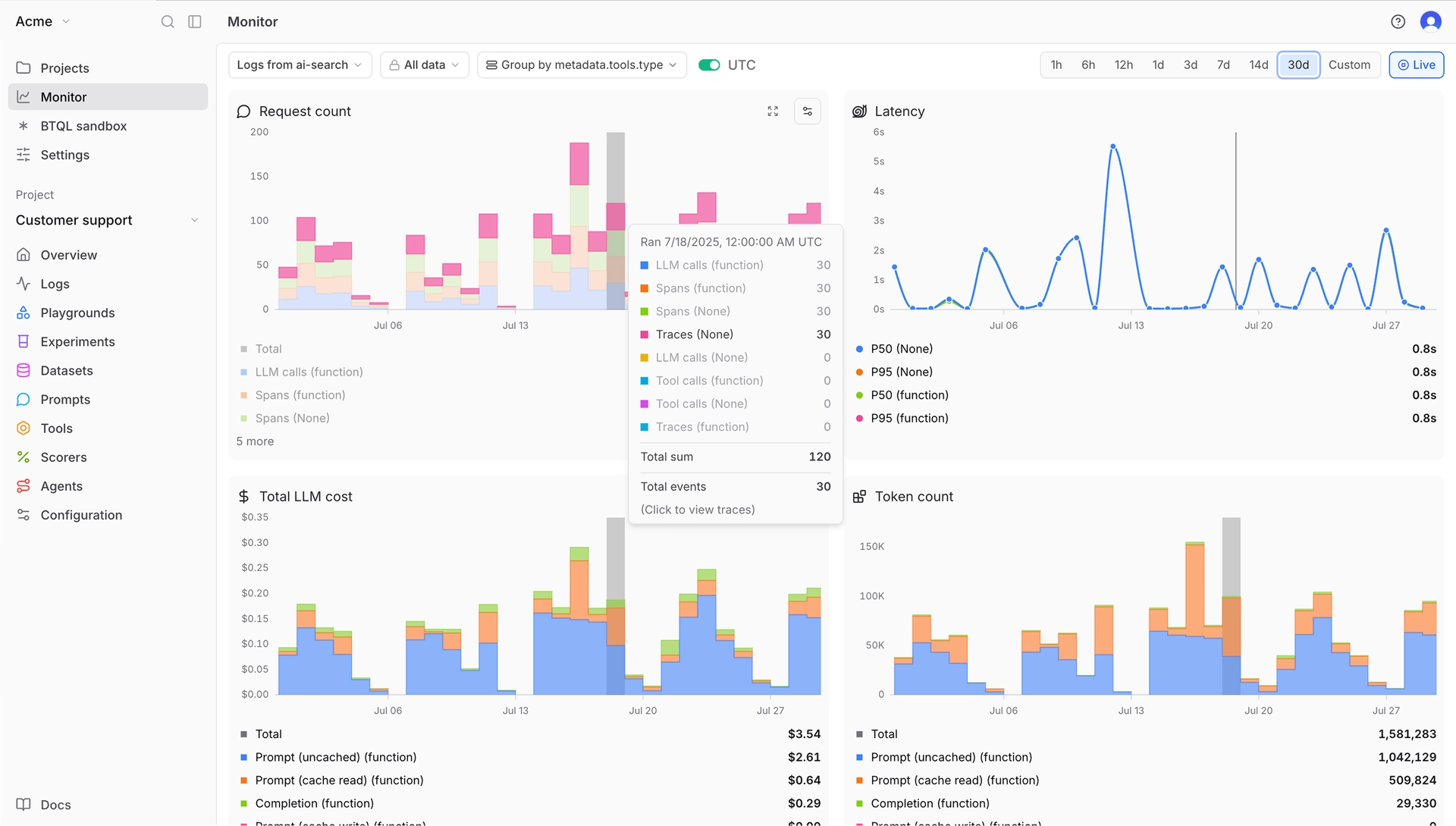

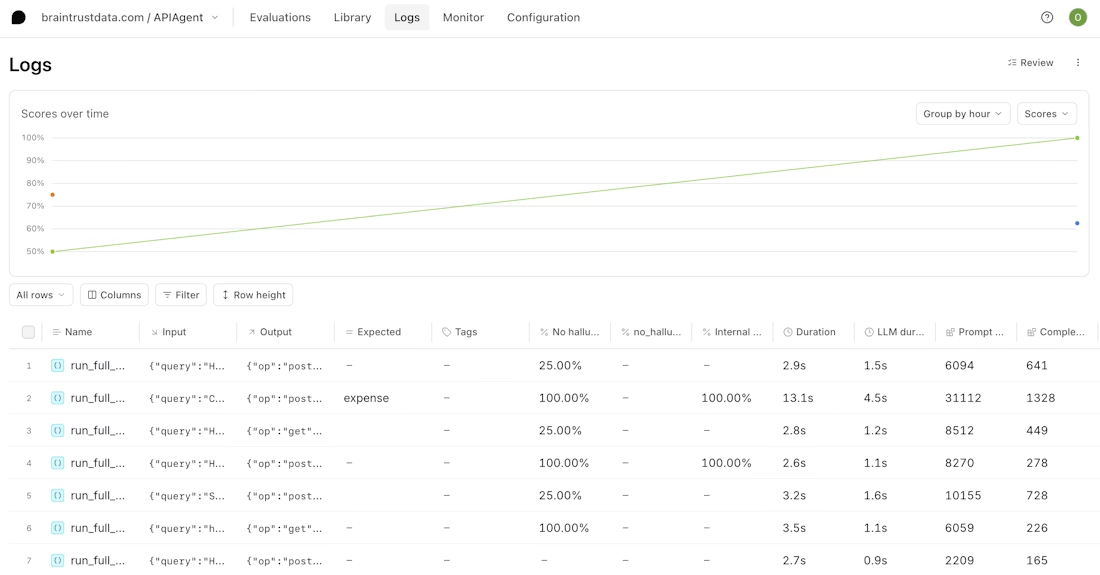

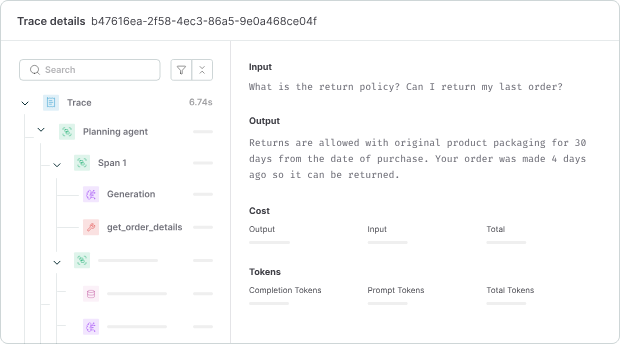

Braintrust is an end-to-end platform that connects observability directly to systematic improvement. Production traces become eval cases with one click, eval results show up on every pull request, and PMs and engineers work in the same interface without handoffs.

Braintrust is opinionated about workflows in a way that saves time. Get instant AI observability by sending logs to Braintrust. Key metrics are automatically tracked on each log with the ability to configure custom metrics and scorers as well.

Companies like Notion, Zapier, Stripe, and Vercel use Braintrust in production. Notion reported going from fixing 3 issues per day to 30 after adopting the platform.

Best for: Teams shipping AI products to real users who need to catch regressions before they hit production, not just monitor what already happened.

Pros:

- Exhaustive trace logging out of the box: Every trace captures key metrics automatically: duration, LLM duration, time to first token, LLM calls, tool calls, errors (broken down by LLM errors vs. tool errors), prompt tokens, cached tokens, completion tokens, reasoning tokens, estimated cost, and more. No manual instrumentation required.

- Fast load speeds and low latency: Filter, search, and analyze thousands of production traces in seconds. The platform runs on Brainstore, a database purpose-built for AI workloads.

- Online and offline scorers: Run evals against live traffic or test datasets. Scorers are easy to configure: use LLM-as-judge, custom scorers, or deterministic checks. The native GitHub Action posts eval results directly to your pull requests.

- Simple data model: Datasets hold your test cases. Tasks define what you're testing. Scorers measure quality. That's it.

- Great UX for devs and product teams: Engineers write code-based tests using the Python or TypeScript SDK. PMs prototype prompts in the playground with real data. Everyone reviews results together.

- Hosted SaaS: No infrastructure to provision. Sign up, add the SDK, start tracing in minutes.

- AI proxy: The AI proxy gives you a single OpenAI-compatible API for models from OpenAI, Anthropic, Google, and others. Every call gets traced and cached automatically.

- Generous free tier: 1M trace spans, 10k scores, unlimited users.

Cons:

- Self-hosting requires an enterprise plan

- Pro tier ($249/month) may be steep for solo developers or very early-stage teams

Pricing: Free (1M spans, 10k scores, 14-day retention), Pro $249/month (unlimited spans, 5GB data, 1-month retention), Enterprise custom. See pricing details →

2. Langfuse

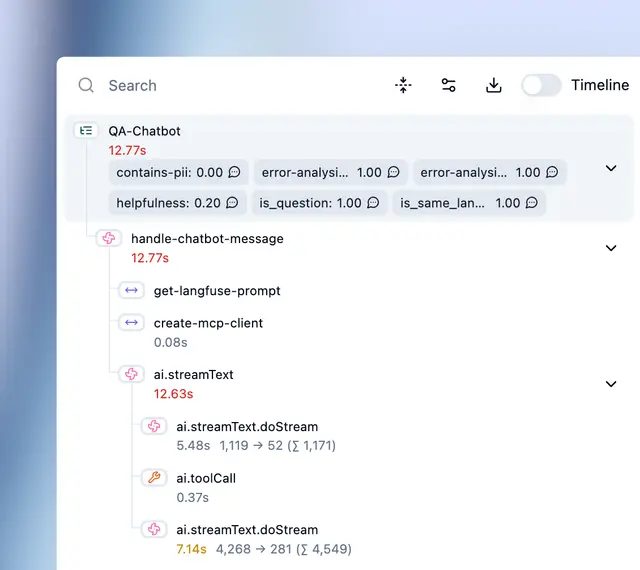

Langfuse is the open-source option in LLM observability. The platform covers tracing, prompt management, and evaluations with multi-turn conversation support.

Best for: Teams who want open-source flexibility, especially those comfortable self-hosting.

Pros:

- Fully open-source under MIT license. Self-host without restrictions.

- OpenTelemetry support for piping traces into existing infrastructure

- Active community and frequent releases

- Cost tracking with automatic token counting

Cons:

- UI is functional but less polished than commercial alternatives

- CI/CD integration requires custom work

- Self-hosting needs DevOps knowledge to set up properly

Pricing: Free cloud tier (50k observations/month), Pro starts at $59/month, self-hosted is free.

3. LangSmith

LangSmith comes from the LangChain team. If you're building with LangChain or LangGraph, setup is a single environment variable. The platform understands LangChain's internals and surfaces them in debugging views that make sense for that ecosystem.

Best for: Teams already using LangChain or LangGraph who want native integration.

Pros:

- Native LangChain integration. Set one environment variable and tracing works.

- Strong debugging for agent workflows with step-by-step visibility

- Evaluation tools support automated testing and LLM-as-judge

- Live monitoring dashboards with alerting

- OpenTelemetry support added in 2025

Cons:

- Best experience requires LangChain. Less compelling if you're using other frameworks.

- Per-seat pricing gets expensive as headcount scales

- Self-hosting is enterprise-only

Pricing: Developer free (5k traces/month), Plus $39/user/month (10k traces/month included), Enterprise custom.

4. Helicone

![]()

Helicone is an AI Gateway with routing, failovers, rate limiting, and caching across 100+ models in addition to an evals platform.

Best for: Teams who want gateway features in addition to evals.

Pros:

- Built-in caching reduces LLM costs on duplicate requests

- AI Gateway routes to 100+ models with automatic failovers

- Session tracing for multi-step workflows

Cons:

- Less depth on evaluation features. This is observability and gateway, not evals.

- More focused on operational metrics than quality improvement workflows

Pricing: Free (10k requests/month), $20/seat/month plus usage-based pricing.

Check out our guide comparing Helicone and Braintrust

5. Maxim AI

Maxim AI combines simulation, evaluation, and observability in their platform. The standout feature is agent simulation: test your AI across thousands of scenarios with different user personas before you ship.

Best for: Teams who want to come up with AI-generated test cases.

Pros:

- Agent simulation engine tests workflows across varied scenarios and user personas

- LLM gateway in the app

- Pre-built evaluator library for common quality checks

- SOC 2 Type 2 compliant with in-VPC deployment option

Cons:

- Newer platform with smaller community. Fewer third-party resources.

- Some features like the no-code agent IDE are still in alpha

Pricing: Free tier available, Pro $29/seat/month, Business $49/seat/month, Enterprise custom.

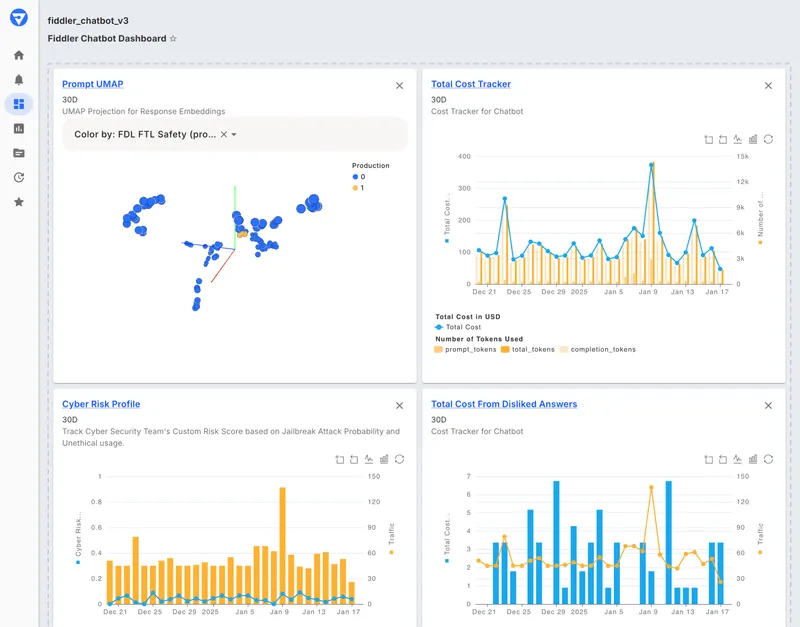

6. Fiddler AI

Fiddler AI tracks both traditional ML and LLMs. If you're running recommendation models, fraud detection, and a customer service chatbot, you can monitor all of them in one place. The focus is enterprise: explainability, compliance, security.

Best for: Enterprises running both ML and LLM workloads who need explainability and regulatory compliance.

Pros:

- Unified observability for predictive ML and generative AI in one dashboard

- Explainable AI features including Shapley values and feature importance

- Drift detection and data quality monitoring

- Root cause analysis and segment analysis tools

- VPC deployment, SOC 2, and support for regulated industries

Cons:

- Enterprise pricing. You'll need to talk to sales.

- Steeper learning curve given the breadth of features

- More suited for organizations with dedicated ML platform teams

Pricing: Contact sales. Enterprise-focused with custom pricing.

7. Evidently AI

Evidently AI is an open-source library with over 20 million downloads and 100+ built-in metrics. If you're coming from a traditional ML background and adding LLMs, the mental model will feel familiar. Evidently also offers hosted SaaS for their open source tooling.

Best for: Teams running both traditional ML and LLM workloads who want unified monitoring.

Pros:

- 100+ pre-built metrics for data quality, model performance, and drift detection

- Open-source with permissive license

- Strong data drift detection

- LLM evaluation support with tracing and no-code workflows in cloud version

Cons:

- Less emphasis on production-to-improvement loops

- Best features are in the cloud version; open-source is more limited for LLM use cases

Pricing: Free tier (10k rows/month), Pro $50/month, Expert $399/month, Enterprise custom.

Comparison table

| Platform | Starting price | Best for | Standout features |

|---|---|---|---|

| Braintrust | Free (1M spans) | Teams shipping AI products who need evals + observability | CI/CD evals, exhaustive auto-captured metrics, fast queries, PM playground |

| Langfuse | Free / Self-host | Open-source enthusiasts, data control | MIT license, OpenTelemetry, 19k+ GitHub stars |

| LangSmith | Free (5k traces) | LangChain/LangGraph users | Native LangChain integration, agent debugging |

| Helicone | Free (10k requests) | Fast setup, gateway features | 1-line integration, caching, AI gateway for 100+ models |

| Maxim AI | Free | Pre-release testing, agent simulation | Simulation engine, Bifrost gateway, no-code UI |

| Fiddler AI | Contact sales | Enterprise ML + LLM + compliance | Explainability, drift detection, regulatory features |

| Evidently AI | Free (10k rows) | ML + LLM unified monitoring | 100+ metrics, data drift, open-source |

Ready to ship AI products with confidence? Start free with Braintrust →

Why Braintrust is the best choice for AI observability

Most observability tools stop at showing what happened. Braintrust is designed to help teams fix it.

With Braintrust, every call to an LLM is logged, including tool calls in agent workflows. You can inspect the full chain of execution, from the initial prompt through downstream actions like retrieval or web search. Each trace captures key metrics by default, AI outputs can be scored against live evaluations, and any production log can be converted into a test case with a single click.

This shortens a process that is usually slow and manual. In many teams, identifying a bad response is only the beginning. Engineers still need to export logs, recreate the scenario, wire up an evaluation, and then remember to check whether the fix actually improved behavior. That work often gets deferred or skipped entirely.

Braintrust removes most of that overhead. A production trace can be added directly to a dataset, evaluated alongside existing cases, and surfaced in CI on the next pull request. Observability, testing, and iteration all happen in the same system, which makes it easier to turn real failures into permanent guardrails.

The underlying infrastructure is built for the size of AI data. LLM traces are significantly larger than traditional application traces, often tens of kilobytes per span, and much more for complex agent runs. Braintrust's storage and query layer is designed for this scale, which keeps searches and filtering responsive even across large volumes of production data.

The workflow is also shared. Engineers and product managers work in the same interface, using the same traces and evaluation results. There's no separate handoff process or custom reporting step. Teams that iterate quickly tend to ship better AI systems, and Braintrust is optimized around that reality.

When Braintrust might not be the right fit

Braintrust isn't trying to be everything to everyone. A few cases where you might look elsewhere:

- If you need fully open-source: Braintrust isn't open-source. If that's a hard requirement for your organization, Langfuse is the strongest option in this space.

- If you're all-in on LangChain: LangSmith's native integration with LangChain and LangGraph is hard to beat if that's your entire stack. Braintrust works with LangChain, but you won't get the same depth of framework-specific tooling.

- If you only need a gateway: Braintrust includes an AI proxy, but if routing and model switching is your only need, a dedicated gateway like OpenRouter can be simpler.

FAQs

What is AI observability?

AI observability is the practice of monitoring, tracing, and analyzing AI systems to understand behavior, detect issues, and improve quality over time. It goes beyond traditional monitoring by evaluating output quality, not just system health. A good observability platform tells you both "the API responded in 200ms" and "the response was helpful and accurate."

How do I choose the right AI observability tool?

Start with your stack and team. If you're using LangChain, LangSmith integrates seamlessly. If you want open-source and data control, Langfuse is strong. If you need evals tied to CI/CD and a unified workflow for PMs and engineers, Braintrust is purpose-built for that. Consider tracing depth, evaluation features, and whether non-engineers need access.

What's the difference between AI observability and traditional APM?

Traditional APM tracks system metrics: latency, error rates, uptime. AI observability adds quality evaluation. Did the response make sense? Was the retrieval relevant? Did the agent use the right tool? The model can return a 200 status code and still produce a useless answer. AI observability catches that.

If I'm already logging with a basic solution, should I switch?

If you can answer these questions confidently, you might be fine: Which prompt version performs best? What percentage of responses are high quality? Which user segments see the most failures? If you can't, dedicated AI observability will give you answers and probably pay for itself in reduced debugging time.

How quickly can I see results with AI observability?

Initial traces flow within 30 minutes of setup for most platforms. Meaningful quality insights take longer, typically 1-2 weeks as you build eval datasets from production data and establish baselines. The ROI compounds over time as your test suite grows from real-world edge cases.

Can I use multiple observability tools together?

Yes. A common pattern is using a gateway tool like Helicone for cost tracking and caching, alongside a platform like Braintrust for evals and quality monitoring. OpenTelemetry support makes this easier since traces export in a standard format that multiple platforms can ingest.

What's the best alternative to LangSmith?

Braintrust offers similar capabilities without requiring LangChain. It's framework-agnostic with native TypeScript support, stronger CI/CD integration, and a unified workspace where PMs and engineers collaborate directly. If you're not locked into the LangChain ecosystem, Braintrust provides more flexibility.