Langfuse alternatives: Top 5 competitors compared (2026)

Braintrust leads for production teams, with CI/CD deployment blocking, a zero-code proxy, managed infrastructure, and 1M free trace spans.

Runners-up:

- Arize - Open-source and SaaS options, but infrastructure expertise required

- LangSmith - Perfect for LangChain, but expensive per-trace pricing

- Fiddler AI - Enterprise ML/LLM combo, but no free tier

- Helicone - Multi-provider proxy with cost tracking, but no evaluation

Pick Braintrust if you need automated deployment blocking. Pick others for open-source requirements or framework-specific needs.

Why teams look for Langfuse alternatives

Langfuse is a great self-hosted product. It serves solo developers prototyping locally and large enterprises running their own data centers. But when it comes to batteries-included tools for optimizing AI agents, many teams look for more robust products.

Most production teams fall between solo developers and enterprises requiring self-hosting. Hosted SaaS platforms eliminate infrastructure management, letting teams focus on building features instead of tuning databases. These platforms connect evaluation and production monitoring automatically, removing manual correlation work. They integrate directly into CI/CD pipelines with automated quality gates that block deployments when metrics fail, replacing manual log reviews with systematic regression prevention.

Production teams need managed platforms that run without infrastructure, enable collaborative evaluation, and automatically block bad code. This is the main reason teams explore Langfuse alternatives.

Top 5 Langfuse alternatives (2026)

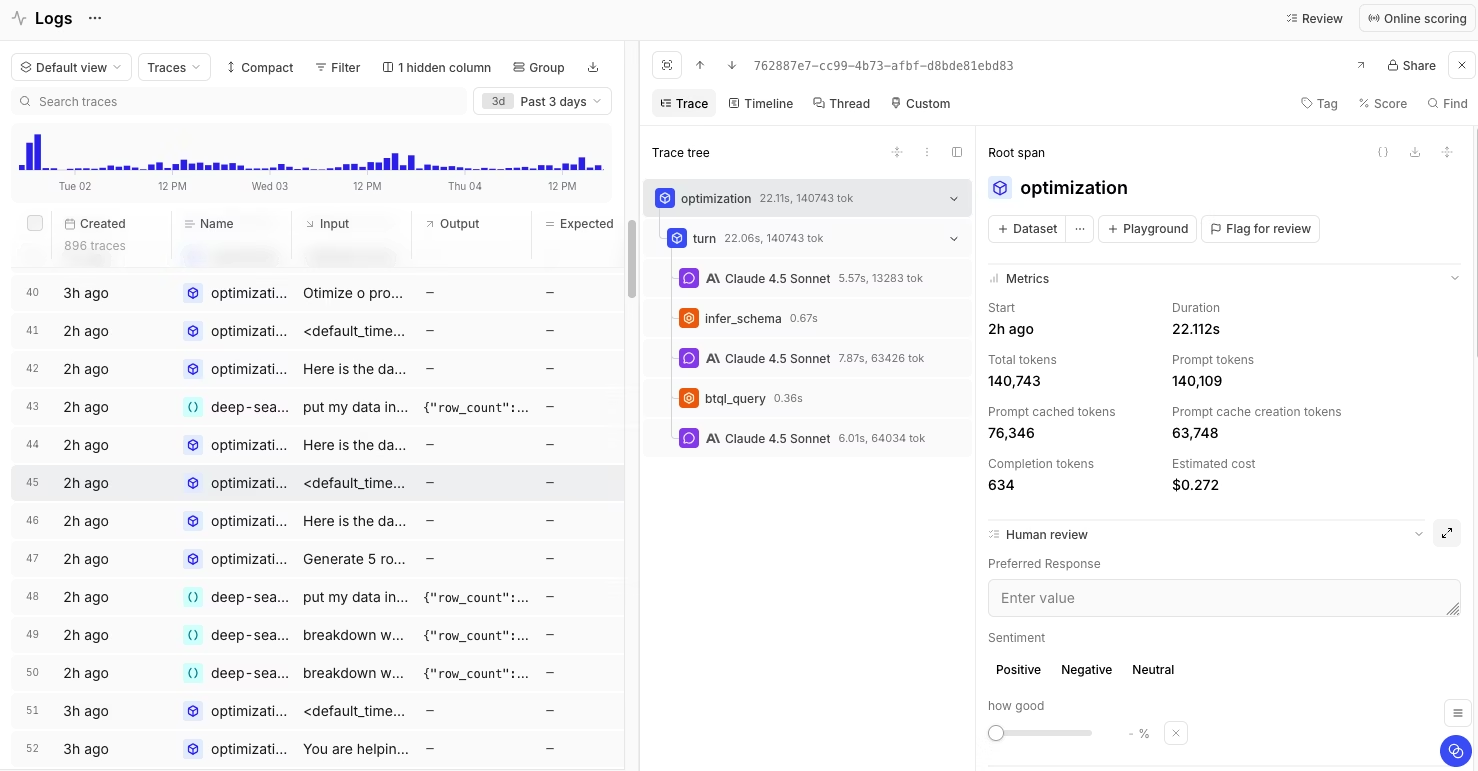

1. Braintrust: Best LLM evaluation platform with CI/CD deployment blocking

Braintrust catches quality issues during code review, not after deployment. When evaluation metrics fail in your CI/CD pipeline, Braintrust blocks the merge automatically, so your team fixes the prompt before it ever reaches production.
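The idea of a quality gate can be sketched in a few lines. This is a minimal illustration, not Braintrust's implementation: the function names, the score floor of 0.8, the allowed drop of 0.05, and the sample scores are all assumptions chosen for the example. A CI job would run a script like this after the evaluation step and fail the pipeline on a non-zero exit code, which is what keeps the merge blocked.

```python
from statistics import mean


def gate(scores, baseline, min_score=0.8, max_drop=0.05):
    """Return (passed, reasons) for per-example eval scores in [0, 1].

    min_score and max_drop are hypothetical thresholds for this sketch.
    """
    if not scores:
        return False, ["no evaluation scores were produced"]
    avg = mean(scores)
    reasons = []
    if avg < min_score:
        reasons.append(f"mean score {avg:.3f} is below the floor {min_score:.3f}")
    if baseline - avg > max_drop:
        reasons.append(
            f"mean score dropped {baseline - avg:.3f} from baseline {baseline:.3f}"
        )
    return not reasons, reasons


def main(scores, baseline):
    """Print failure reasons and return a process exit code (0 = pass)."""
    passed, reasons = gate(scores, baseline)
    for reason in reasons:
        print(f"FAIL: {reason}")
    return 0 if passed else 1


# Hypothetical scores from a pull request's eval run and the main-branch baseline.
exit_code = main([0.91, 0.84, 0.62, 0.88], baseline=0.90)
# In a real CI step the script would end with: sys.exit(exit_code)
```

Checking both an absolute floor and a drop from baseline catches two different failures: a prompt that was never good enough, and a prompt that was fine until this change.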

Most teams juggle separate tools for experimentation, evaluation, and monitoring. Braintrust puts everything in one place. You test a prompt variation, run it through your evaluation suite, and see how similar patterns behaved in production, all without switching contexts. Production traces automatically become test cases, so failures you fix stay fixed.
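As a rough sketch of how production traces can turn into regression tests, the snippet below filters low-scoring traces and converts them into dataset rows. The trace fields (`id`, `input`, `output`, `score`) and the 0.5 cutoff are hypothetical and do not reflect any vendor's schema.

```python
def traces_to_test_cases(traces, max_score=0.5):
    """Turn low-scoring production traces into de-duplicated test cases.

    Each trace is assumed to be a dict with "id", "input", "output", "score".
    """
    seen_inputs = set()
    cases = []
    for trace in traces:
        score = trace.get("score")
        if score is None or score > max_score:
            continue  # keep only traces that were scored and scored poorly
        key = trace["input"].strip().lower()
        if key in seen_inputs:
            continue  # the same failing input only needs one test case
        seen_inputs.add(key)
        cases.append(
            {
                "input": trace["input"],
                "bad_output": trace["output"],  # kept so reviewers see what went wrong
                "source_trace_id": trace["id"],
            }
        )
    return cases


# Hypothetical production traces.
TRACES = [
    {"id": "t1", "input": "Refund policy?", "output": "We never refund.", "score": 0.1},
    {"id": "t2", "input": "refund policy? ", "output": "No refunds.", "score": 0.2},
    {"id": "t3", "input": "Opening hours?", "output": "9am to 5pm.", "score": 0.9},
]
test_cases = traces_to_test_cases(TRACES)
```

Once a row like this sits in the evaluation dataset, every later prompt change is scored against the input that failed in production.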

The difference shows up in the daily workflow. Instead of exporting traces from one tool, running evals in another, then discussing results in Slack while tracking decisions in spreadsheets, your whole team works in the same interface. Engineers and product managers compare prompt outputs side-by-side and vote on which version ships.

Braintrust key features

- Custom scorers with Autoevals, hallucination detection, factuality checks, scorers auto-generated by Loop AI, and statistical significance testing

- Run evaluations locally, in CI/CD pipelines, or on schedules with 10,000 free eval runs monthly

- SDK captures nested calls, AI Proxy logs OpenAI/Anthropic/Cohere with zero code changes, 1M free trace spans monthly

- Trace views show retrieval context and reranking, link traces to dataset examples for debugging

- Version control with rollback, A/B testing with traffic splitting, and an interactive playground across providers

- Import datasets from CSV/JSON/production logs, point-in-time versioning, and team annotation workflows

- GitHub Actions and GitLab CI integration, PR comments with pass/fail status, quality gates block merges

- Cloud-hosted with SOC 2 or self-hosted (Enterprise), multi-provider support
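To make "statistical significance testing" in the list above concrete, here is one common way to check whether a score difference between two prompt versions is more than noise: a paired sign-flip permutation test. This is an illustrative technique chosen for the sketch, not a description of how Braintrust computes significance, and the function name and defaults are assumptions.

```python
import random
from statistics import mean


def paired_permutation_p_value(baseline, candidate, n_resamples=2000, seed=0):
    """Two-sided p-value for the mean per-example score difference.

    baseline and candidate hold scores for the same examples, in the same order.
    """
    if len(baseline) != len(candidate) or not baseline:
        raise ValueError("score lists must be non-empty and the same length")
    diffs = [c - b for b, c in zip(baseline, candidate)]
    observed = abs(mean(diffs))
    rng = random.Random(seed)  # seeded so CI runs are reproducible
    hits = 0
    for _ in range(n_resamples):
        # Under the null hypothesis, each difference is equally likely to be + or -.
        flipped = [d if rng.random() < 0.5 else -d for d in diffs]
        if abs(mean(flipped)) >= observed:
            hits += 1
    # The +1 terms keep the estimate away from an impossible p-value of zero.
    return (hits + 1) / (n_resamples + 1)


# Hypothetical per-example scores for two prompt versions.
p_same = paired_permutation_p_value([0.7, 0.8, 0.9], [0.7, 0.8, 0.9])
p_better = paired_permutation_p_value([0.5] * 12, [0.9] * 12)
```

A small p-value suggests the change in scores is unlikely to be chance; identical score lists give a p-value of 1.0.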

Pros

- Automatic merge blocking when quality drops. Langfuse requires manual review before merging changes.

- No infrastructure to manage. Langfuse self-hosting needs PostgreSQL, ClickHouse, Redis, and S3 storage, plus Kubernetes for production scale.

- Evaluation automatically links to production traces. Langfuse runs experiments separately from live monitoring.

- Larger free tier. 1M trace spans and unlimited users versus 50K units for two users on Langfuse Cloud.

- 30-minute setup. Langfuse production deployment requires significant time.

Cons

- Self-hosting is available only on the Enterprise tier

- Closed-source, unlike Langfuse's MIT license

Pricing

Free tier includes 1M trace spans per month, unlimited users, and 10,000 evaluation runs. Pro plan starts at $249/month. Custom enterprise plans available.

Best for

Teams building production LLM applications who need CI/CD deployment blocking, automated evaluation workflows, collaborative prompt experimentation, and integrated observability without framework lock-in.

Why teams choose Braintrust over Langfuse

| Feature | Braintrust | Langfuse |
|---|---|---|
| Deployment blocking | Automatic merge prevention on failures | Manual review required |
| Infrastructure | Fully managed platform | Self-host PostgreSQL, ClickHouse, Redis, S3 |
| Proxy mode | Zero-code traffic capture | SDK in all services |
| Eval-trace integration | Automatic linking | Manual correlation |
| Production setup | 30 minutes | Kubernetes deployment |
| Scorer generation | AI-powered | Manual creation |
| Free tier | 1M spans/month, unlimited users | 50K units/month, two users |

Engineering teams at Perplexity, Airtable, and Replit use Braintrust's automated blocking to stop prompt regressions during pull request reviews rather than debugging customer-reported issues post-deployment. Start with Braintrust's free tier →

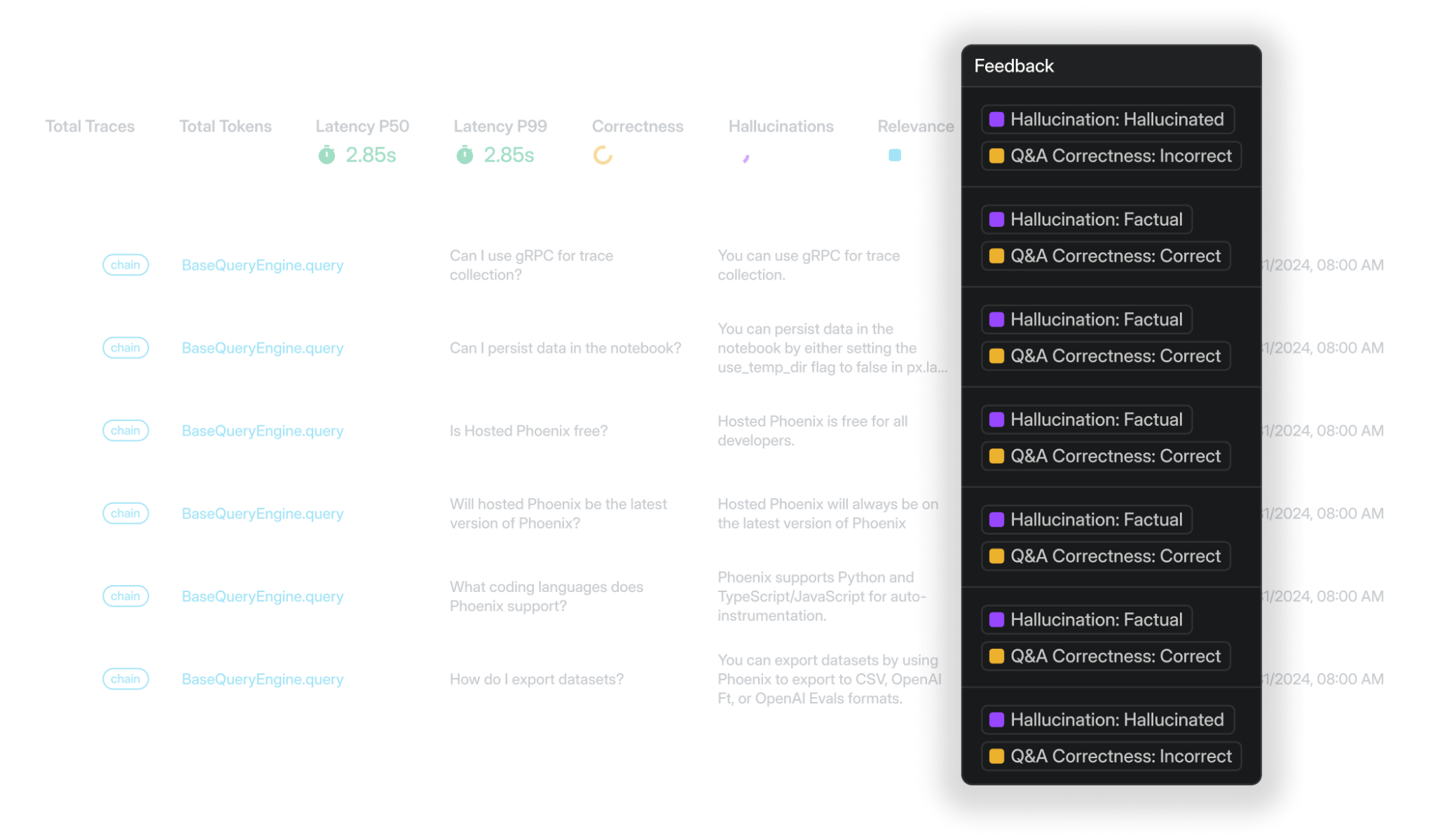

2. Arize: Open-source and enterprise LLM observability

Arize offers Phoenix (open-source) and Arize AX (SaaS). Phoenix provides self-hosted LLM observability with no licensing costs. Arize AX adds enterprise support and traditional ML monitoring capabilities.

Pros

- Open-source option with free self-hosting

- Framework integrations for LlamaIndex, LangChain, DSPy applications

- Dataset versioning and experiment tracking

- Agent graph visualization for multi-step workflows

Cons

- Self-hosting Phoenix requires Docker and Kubernetes expertise similar to Langfuse

- No automatic deployment blocking in CI/CD pipelines

- Evaluation workflows need custom scripts

- Every service requires SDK instrumentation, no zero-code proxy

- Free tier is limited to 25K spans and one user, compared to Braintrust's 1M spans for unlimited users

Pricing

Free for open-source self-hosting. Managed cloud at $50/month. Custom enterprise pricing.

Best for

Teams managing self-hosted services on Docker or Kubernetes who want open-source LLM observability.

Read our guide on Arize Phoenix vs. Braintrust.

3. LangSmith: LangChain-integrated observability and evaluation

LangSmith ships directly from the LangChain maintainers as their official observability solution. The platform traces LangChain and LangGraph applications through automatic instrumentation, targeting teams already committed to the LangChain ecosystem.

Pros

- Single environment variable enables complete tracing for LangChain applications

- Evaluation framework includes LLM-as-a-Judge and custom scoring functions

- Dataset versioning with side-by-side experiment comparison

- Prompt management with complete iteration history

- Human feedback collection through annotation interfaces

Cons

- Deep LangChain integration makes it harder for teams using other frameworks or raw provider APIs

- Usage-based pricing grows with trace volume rather than delivered value

- No proxy mode; every service needs framework or SDK integration

- Self-hosting is available only in the Enterprise tier; smaller teams with data residency needs pay for cloud

- Evaluation results appear in dashboards but don't automatically prevent deployments when quality drops

Pricing

Free tier with 5K traces monthly for one user. Paid plan at $39/user/month. Custom enterprise pricing with self-hosting.

Best for

Teams building exclusively on LangChain and LangGraph who need zero-config tracing and accept vendor lock-in for deep framework integration.

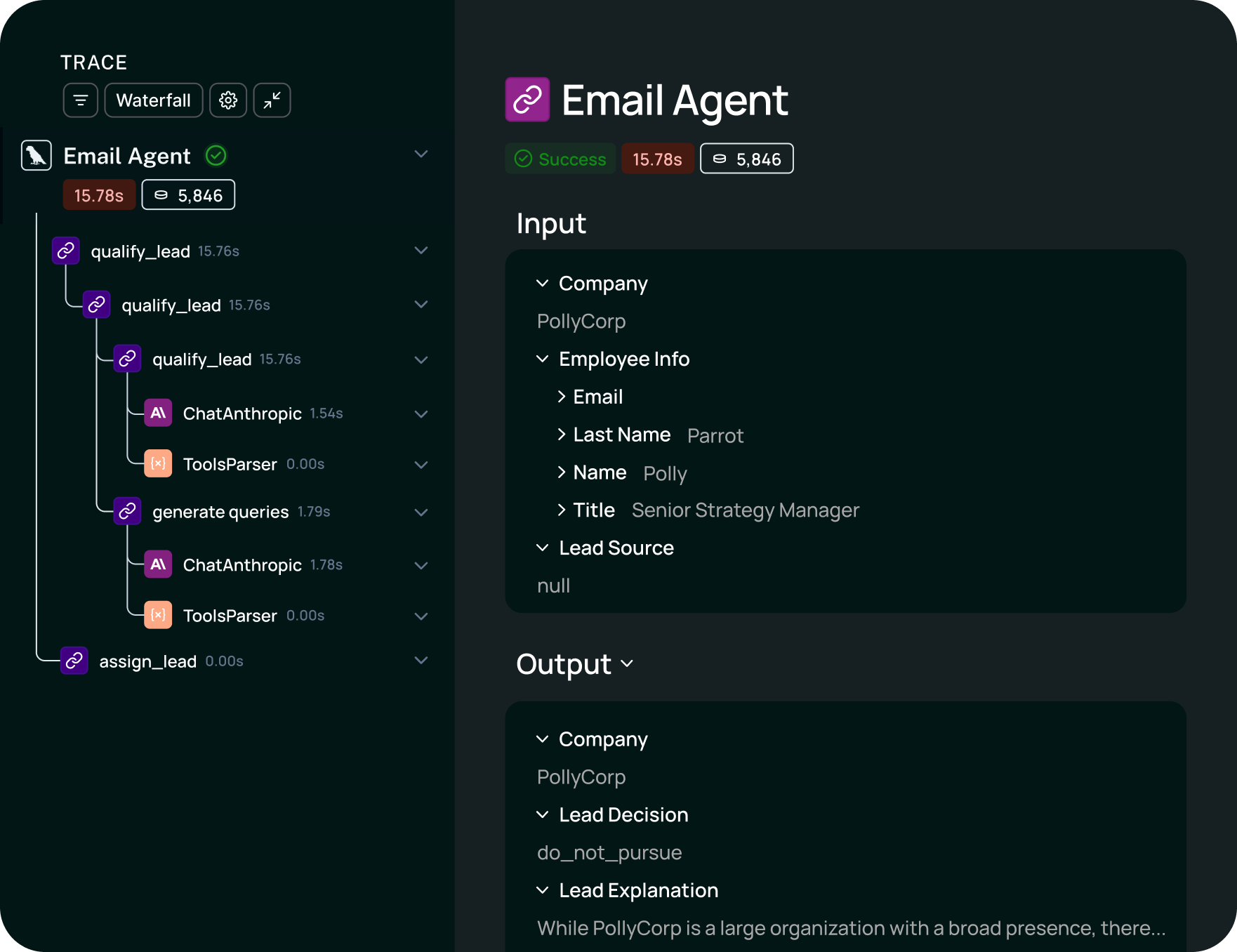

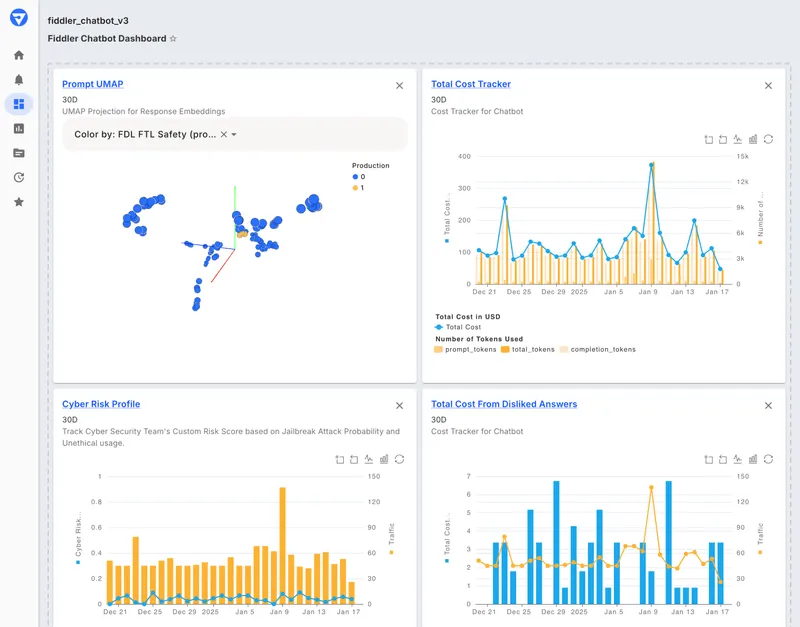

4. Fiddler AI: Enterprise ML and LLM monitoring platform

Fiddler AI built its platform for classical machine learning monitoring, then extended capabilities into generative AI observability. The product targets enterprises running both traditional ML models and LLM applications that want unified monitoring.

Pros

- Monitors traditional ML models and LLM applications in one dashboard

- Embedding drift detection tracks distribution shifts in real-time

- Built-in hallucination detection with configurable safety rules

- Root cause analysis surfaces performance degradation sources

- Threshold-based alerting for quality metrics
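One simple way to picture embedding drift detection with a threshold alert is to compare the centroid of a reference window of embeddings with the centroid of recent traffic. The sketch below is a generic illustration and not Fiddler's method; the function names and the 0.1 threshold are assumptions.

```python
import math


def centroid(vectors):
    """Element-wise mean of a non-empty list of equal-length vectors."""
    count = len(vectors)
    return [sum(column) / count for column in zip(*vectors)]


def cosine_distance(a, b):
    """1 - cosine similarity; 0 means same direction, 1 means orthogonal."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    if norm == 0:
        raise ValueError("cannot compare a zero vector")
    return 1.0 - dot / norm


def drift_alert(reference, current, threshold=0.1):
    """Return (distance, alert) comparing two windows of embeddings."""
    distance = cosine_distance(centroid(reference), centroid(current))
    return distance, distance > threshold


# Toy 2-D embeddings: the current window points in a different direction.
REFERENCE = [[1.0, 0.0], [0.9, 0.1]]
CURRENT = [[0.1, 0.9], [0.0, 1.0]]
distance, alert = drift_alert(REFERENCE, CURRENT)
```

Centroid distance is cheap to compute on a stream, though it misses shifts that change the spread of embeddings without moving their mean.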

Cons

- Interface design originates from tabular ML workflows, making generative AI patterns less natural

- Monitoring and alerting happen after deployment, with no automatic quality gates in CI/CD

- Enterprise-only sales process without free tier or self-service trial access

- Traffic capture requires SDK instrumentation, with no zero-code proxy option

- Prompt iteration happens outside the platform, with no version control or A/B testing built in

Pricing

Custom enterprise pricing only.

Best for

Enterprises currently using Fiddler for classical ML model monitoring that need to extend the same platform to cover LLM applications with unified drift detection and explainability across both model types.

5. Helicone: Multi-provider proxy with cost tracking

Helicone operates as a unified API gateway for LLM providers such as OpenAI, Anthropic, Google, and Cohere. The platform provides automatic request logging with a focus on cost tracking and usage analytics.

Pros

- Single gateway supports multiple LLM providers

- Endpoint change enables logging without application code modifications

- Cost breakdowns show spending per user, project, or provider
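The cost breakdown described above is essentially a group-by over logged requests. The sketch below shows the arithmetic, assuming a hypothetical log record shape and a made-up price table in USD per 1M tokens; neither reflects Helicone's schema or any provider's real rates.

```python
from collections import defaultdict

# Hypothetical prices in USD per 1M tokens as (input, output); not real vendor rates.
PRICES = {
    ("provider-a", "model-small"): (2.0, 8.0),
    ("provider-b", "model-large"): (3.0, 15.0),
}


def request_cost(record):
    """Cost in USD of one logged request, from its token counts."""
    input_price, output_price = PRICES[(record["provider"], record["model"])]
    total = record["input_tokens"] * input_price + record["output_tokens"] * output_price
    return total / 1_000_000


def cost_by(records, dimension):
    """Sum request costs grouped by a record field such as "user" or "provider"."""
    totals = defaultdict(float)
    for record in records:
        totals[record[dimension]] += request_cost(record)
    return dict(totals)


# Hypothetical gateway logs.
LOGGED_REQUESTS = [
    {"provider": "provider-a", "model": "model-small", "user": "alice",
     "input_tokens": 1_000_000, "output_tokens": 500_000},
    {"provider": "provider-b", "model": "model-large", "user": "bob",
     "input_tokens": 200_000, "output_tokens": 100_000},
    {"provider": "provider-b", "model": "model-large", "user": "alice",
     "input_tokens": 100_000, "output_tokens": 0},
]
by_user = cost_by(LOGGED_REQUESTS, "user")
by_provider = cost_by(LOGGED_REQUESTS, "provider")
```

Because every request passes through one gateway, the same records can be grouped by user, project, or provider without extra instrumentation in the application.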

Cons

- No evaluation capabilities: no scoring, no test suites, no quality analysis

- No tools for dataset creation and prompt comparison

- Request logging only, missing nested trace analysis and agent debugging capabilities

- Unlike Braintrust, no automatic deployment blocking in CI/CD pipelines

Pricing

Free tier (10K requests per month). Paid plan starts at $79/month.

Best for

Companies using multiple LLM providers who need unified request logging and per-provider cost tracking through one gateway without evaluation workflows.

Read our guide on Helicone vs. Braintrust.

Langfuse alternative feature comparison

| Feature | Braintrust | Arize Phoenix | LangSmith | Fiddler AI | Helicone |
|---|---|---|---|---|---|
| Distributed tracing | ✅ | ✅ | ✅ | ✅ | ✅ |
| Evaluation framework | ✅ Native | ✅ Templates | ✅ | Partial | ❌ |
| CI/CD integration | ✅ | ✅ Guides | Partial | ❌ | ❌ |
| Deployment blocking | ✅ | ❌ | ❌ | ❌ | ❌ |
| Proxy mode | ✅ | ❌ | ❌ | ❌ | ✅ |
| Open source | ❌ | ✅ Full | ❌ | ❌ | ✅ |
| Self-hosting | ✅ | ✅ Phoenix only | ✅ | ✅ | ✅ |
| Multi-provider | ✅ | ✅ | ✅ | ✅ | ✅ |
| Experiment comparison | ✅ | ✅ | ✅ | Basic | ❌ |
| Custom scorers | ✅ | ✅ | ✅ | ✅ | ❌ |
| A/B testing | ✅ | ❌ | Partial | ❌ | ❌ |
| Agent visualization | ✅ | ✅ Graphs | ✅ | ❌ | ❌ |
| SaaS free tier | ✅ 1M trace spans, unlimited users | ✅ 25K trace spans, one user | ✅ 5K traces | ❌ | ✅ 10K requests |

Choosing the right Langfuse alternative

Choose Braintrust if: You ship LLM features daily and cannot afford prompt regressions reaching production. Automated deployment blocking, zero-code observability, and managed infrastructure mean your team moves faster while your competitors debug customer-reported issues.

Choose Arize Phoenix if: Full open-source code access is non-negotiable, you have platform engineering resources for PostgreSQL and Kubernetes management, or OpenTelemetry standards matter for your stack.

Choose LangSmith if: LangChain or LangGraph powers your entire application architecture, zero-config framework tracing justifies vendor coupling, or per-trace pricing fits your budget at current scale.

Choose Fiddler AI if: Your organization already pays for Fiddler to monitor classical ML models and wants one platform covering both traditional and generative AI with enterprise support contracts.

Choose Helicone if: You call several model providers and want them behind one gateway, straightforward cost tracking covers your observability needs, or you want the simplest possible proxy setup without evaluation features.

Why Braintrust is the best Langfuse alternative

Langfuse gives you infrastructure control. Braintrust gives you time back.

The difference matters when you're shipping code daily. Langfuse requires someone on your team who understands database performance, Kubernetes scaling, and orchestration of the evaluation pipeline. That person exists at some companies. At others, that expertise doesn't exist or costs more than a managed platform.

Braintrust changes the equation by preventing problems instead of reporting them. Your CI/CD pipeline runs evaluations and blocks the merge when quality drops. No manual review, no missed regressions, no customer complaints about prompts that should never have shipped. The platform handles infrastructure, proxy logging works without code changes, and production traces feed your test suites automatically.

Companies like Perplexity, Notion, Stripe, and Zapier use Braintrust because preventing bad deployments matters more to them than owning the database layer.

Get started free or schedule a demo to see observability and evaluation working together without infrastructure requirements.

Frequently asked questions

What is the main difference between Langfuse and Braintrust?

Langfuse is open-source with free self-hosting but requires maintaining PostgreSQL, ClickHouse, Redis, and S3 infrastructure, plus Kubernetes for production scale. Braintrust provides a managed platform with zero infrastructure overhead and automatic CI/CD deployment blocking.

Which platform has better evaluation automation?

Braintrust delivers complete evaluation automation with deployment blocking built in. CI/CD pipelines execute scorers automatically, analyze statistical significance, and block merges when quality degrades. Langfuse provides LLM-as-a-Judge evaluations and custom scorer primitives, but teams assemble the orchestration layer themselves for comprehensive regression testing. Braintrust ships this automation ready to use.

Which Langfuse alternative works best for teams already using LangChain?

LangSmith offers zero-config tracing for LangChain applications through automatic instrumentation. However, the deep integration creates vendor lock-in, and per-trace pricing means costs grow with traffic volume. Braintrust integrates with LangChain through SDKs without framework coupling and adds deployment blocking and proxy mode flexibility. Choose LangSmith when framework coupling is acceptable, and Braintrust for framework-agnostic workflows with managed infrastructure.