How Retool uses Loop to turn logs into AI roadmap decisions

With Allen Kleiner, AI Engineering Lead

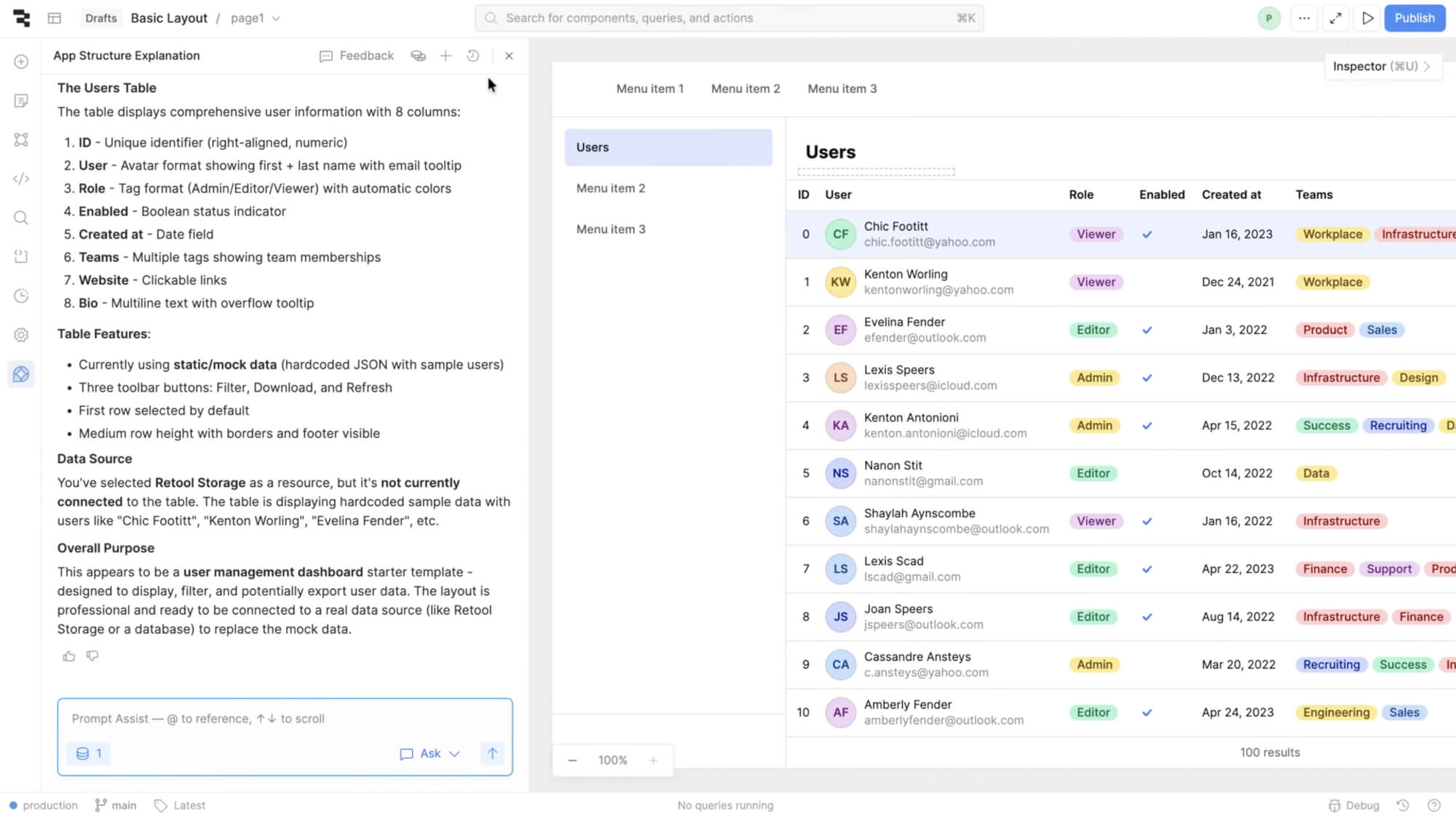

Retool is an enterprise AppGen platform powering how the world's most innovative companies build the internal tools that run their business. Over 10,000 companies rely on Retool to create custom admin panels, dashboards, and workflows. When Retool launched Assist, their AI-powered development assistant, they faced a familiar challenge: with countless potential improvements, how do you decide what to build next?

Rather than relying on intuition or roadmap planning sessions, Retool's team built a data-driven workflow where production logs directly inform their prioritization decisions. Using Loop, Braintrust's AI assistant, they query production data semantically to surface insights in seconds, turning observability into actionable roadmap decisions.

From vibes to data

Before Retool implemented a structured evaluation system, its approach to quality assurance was hands-on and collaborative. This worked well in the early stages of development.

The team would gather for dogfooding sessions, manually test prompts, collect failure modes into tickets, and tackle issues throughout the week. This approach gave them invaluable qualitative insights and helped them deeply understand user needs.

As Assist scaled toward general availability, the team saw an opportunity to evolve their process. They wanted to quantify improvements, see which features resonated most with users, and build a more systematic way to prioritize a growing roadmap. This evolution would let them keep the same commitment to quality while serving many more users.

Production logs as a product roadmap

Retool's current approach treats production data as the primary input for roadmap decisions. Here's how it works:

1. Classify user intent with a front-of-house agent

Every query to Assist first passes through a classifier agent that categorizes what users are trying to do. The classifier outputs categories like:

- adding-pages: Adding new pages to multi-page apps

- app-readme: Generating or updating app documentation

- workflows: Building automation workflows

- performance-queries: Optimizing app speed

- renaming-pages: Page management tasks

- plugin-scope: Working with third-party integrations

By analyzing the distribution of categories in production traffic, the team gains immediate visibility into what users actually want Assist to do, not what they assumed users would want.
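To make the idea concrete, here is a minimal sketch of how that distribution could be computed once each log record carries the classifier's label. The record shape (a dict with an `intent` field) and the sample records are made up for illustration; they are not Retool's or Braintrust's actual log schema.

```python
from collections import Counter

# Made-up log records for illustration: each production request is tagged
# with the category the front-of-house classifier assigned to it.
logs = [
    {"id": "r1", "intent": "adding-pages"},
    {"id": "r2", "intent": "workflows"},
    {"id": "r3", "intent": "adding-pages"},
    {"id": "r4", "intent": "app-readme"},
    {"id": "r5", "intent": "adding-pages"},
    {"id": "r6", "intent": "app-readme"},
]


def intent_distribution(records):
    """Return (category, share of traffic) pairs, most common first."""
    counts = Counter(r["intent"] for r in records)
    total = sum(counts.values())
    return [(intent, n / total) for intent, n in counts.most_common()]


for intent, share in intent_distribution(logs):
    print(f"{intent:<15} {share:.0%}")
```

Sorting by share puts the most requested capabilities at the top, which is exactly the view a roadmap discussion needs.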

2. Query production logs with BTQL

The team built custom dashboards using BTQL (Braintrust Query Language) to surface actionable insights from production data. One critical metric they track is "blast radius" (error rate multiplied by usage volume), which helps the team prioritize fixes by actual user impact rather than by error rate alone. A tool with a 50% error rate but only 10 calls per week (about 5 failures) gets lower priority than one with a 10% error rate and 10,000 calls (about 1,000 failures).
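Computed per tool, blast radius is simply the expected number of failed calls in a period. A minimal sketch, assuming weekly per-tool aggregates have already been pulled out of the logs (for example with a BTQL query); the `ToolStats` type and the tool names are hypothetical, and the numbers are the two tools from the example above.

```python
from dataclasses import dataclass


@dataclass
class ToolStats:
    name: str
    calls_per_week: int
    error_rate: float  # fraction of calls that fail, between 0.0 and 1.0

    @property
    def blast_radius(self) -> float:
        # error rate x usage volume = expected failed calls per week
        return self.error_rate * self.calls_per_week


def rank_by_blast_radius(tools):
    """Highest user impact first, regardless of raw error rate."""
    return sorted(tools, key=lambda t: t.blast_radius, reverse=True)


# Hypothetical tools matching the 50%/10-call and 10%/10,000-call example.
tools = [
    ToolStats("tool_a", calls_per_week=10, error_rate=0.50),
    ToolStats("tool_b", calls_per_week=10_000, error_rate=0.10),
]

for t in rank_by_blast_radius(tools):
    print(f"{t.name}: {t.error_rate:.0%} x {t.calls_per_week} calls "
          f"= {t.blast_radius:.0f} failures/week")
```

Sorting on the product rather than on `error_rate` is the whole design choice: the tool with the scarier percentage drops to second place.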

3. Monitor critical issues through dashboards

Retool's on-call rotation centers around internal dashboards that aggregate production metrics. The team tracks:

- Week-over-week trends in tool call success rates

- Context window overflow incidents

- Model-level errors (API failures, rate limits, quota issues)

- Performance metrics (latency, token usage)
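The week-over-week comparison behind the first metric is simple arithmetic. A minimal sketch, assuming weekly success and total counts are already aggregated; the data, the function names, and the 2-point alert threshold are all made up for illustration.

```python
# Hypothetical weekly aggregates: (week, successful tool calls, total tool calls).
weekly = [
    ("week 1", 9_200, 10_000),
    ("week 2", 9_300, 10_000),
    ("week 3", 8_800, 10_000),
]


def week_over_week(rows):
    """Change in tool call success rate vs. the prior week, in percentage points."""
    rates = [(week, ok / total) for week, ok, total in rows]
    return [
        (week, (rate - prev_rate) * 100)
        for (week, rate), (_, prev_rate) in zip(rates[1:], rates[:-1])
    ]


def flag_regressions(rows, threshold_pp=2.0):
    """Weeks where the success rate fell by at least `threshold_pp` points."""
    return [week for week, delta in week_over_week(rows) if delta <= -threshold_pp]


for week, delta in week_over_week(weekly):
    print(f"{week}: {delta:+.1f} pp")
print("regressions:", flag_regressions(weekly))
```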

When the dashboards showed that context window overflow was affecting a significant number of requests, the team used Loop to analyze the severity and patterns. Loop's analysis confirmed that context window overflow was the top issue, helping them quickly distinguish it from lower-priority problems such as model provider rate limits. "This allowed us to shuffle some priorities, go and address that specific pointed problem, take that on as a project, and monitor its success afterwards," explains Allen Kleiner, AI Engineering Lead at Retool.

4. Use Loop to understand production data faster

Loop helps the team understand production data faster by querying logs semantically and surfacing insights in seconds.

"Loop was our way of getting data or synthesizing log data more efficiently at an aggregate level. We use it to find common error patterns every single week."

When investigating failures, Allen starts with Loop's pre-canned prompts to get an overview, then digs deeper into specific issues. For example, when examining context window problems, Loop surfaced the distribution of errors: context length exceeded, credit quota exhausted, API overloaded errors, and more.
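Loop does this grouping semantically, from a natural-language question. For contrast, a hand-rolled version of the same aggregation might look like the sketch below: keyword rules that bucket raw error messages into the categories mentioned above. The patterns and messages are assumptions for illustration (real provider error text varies), and this is not how Loop works internally.

```python
import re
from collections import Counter

# Hypothetical patterns; real provider error messages vary and would need tuning.
# Order matters: the first matching bucket wins.
ERROR_BUCKETS = [
    ("context_length_exceeded", re.compile(r"context (length|window)|too many tokens", re.I)),
    ("credit_quota_exhausted", re.compile(r"quota|credit", re.I)),
    ("api_overloaded", re.compile(r"overloaded", re.I)),
    ("rate_limited", re.compile(r"rate limit", re.I)),
]


def bucket(message: str) -> str:
    """Return the first matching bucket name, or 'other'."""
    for name, pattern in ERROR_BUCKETS:
        if pattern.search(message):
            return name
    return "other"


def error_distribution(messages):
    return Counter(bucket(m) for m in messages)


errors = [
    "Request exceeds the model's context length",
    "Credit quota exhausted for this organization",
    "API overloaded, please retry",
    "Request exceeds the model's context length",
    "Unexpected tool schema",
]
print(error_distribution(errors).most_common())
```

Rules like these break whenever a provider rewords an error, and every unmatched message lands in "other"; that maintenance burden is the gap a semantic query tool is meant to close.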

One team member uses Loop exclusively to write BTQL queries. Even though the team had built its own internal Retool agent to issue BTQL queries, Loop's advantage was clear:

"Y'all have all of the context and all the data. I was really happy with my experience."

The team relies on Loop to answer questions they ask themselves daily about production behavior, turning weeks of manual log analysis into minutes of semantic queries.

Results: Data-driven roadmap decisions

This AI observability-first approach has fundamentally changed how Retool builds.

Discovered multi-page support was the #1 gap

The classifier revealed that adding pages to multi-page apps was the most frequently requested capability that Assist couldn't handle.

"We knew that we would allow users to build single pages at a time for GA, but this allowed us to prioritize adding a new page, since we knew for sure it was the thing that a lot of our customers wanted to do."

Armed with this data, the team:

- Made multi-page support a top priority

- Created focused datasets from real multi-page queries in production

- Built specialized scorers to validate multi-page generation quality

- Shipped the feature with confidence it would address real user needs

Identified quick wins through usage patterns

The same classifier analysis revealed that app README functionality ranked third in user requests. The team had recently built AI-powered README generation as a standalone feature, but hadn't given Assist the tools to access it.

"This was such a low-lift way to knock out our third most requested thing that we couldn't do."

Within days, they integrated README read/write capabilities, addressing a significant user need with minimal engineering effort. This was a win they would have missed without production data visibility.

Improved classifier accuracy from 72% to 95%

Through iterative evaluation using Braintrust datasets and scoring functions, the team drove the front-of-house classifier from 72% to 95% accuracy. This improvement came from:

- Analyzing misclassified queries in production logs

- Creating targeted test datasets for problematic categories

- Running evals on every change to prevent regressions

- Using real production distributions to weight test cases appropriately
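The last bullet is worth spelling out. One plausible reading, sketched below under that assumption, is to compute accuracy per category and weight each category by its share of production traffic, so the headline number reflects what users actually send rather than however the test set happens to be balanced. The function name, categories, and shares are hypothetical.

```python
from collections import defaultdict


def weighted_accuracy(results, production_share):
    """Classifier accuracy with each category weighted by its share of production traffic.

    results: (true_category, predicted_category) pairs from one eval run.
    production_share: category -> fraction of production requests.
    Categories with no test cases are skipped and the remaining weights renormalized.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for true_cat, predicted in results:
        total[true_cat] += 1
        correct[true_cat] += int(true_cat == predicted)

    covered = {c: w for c, w in production_share.items() if total[c] > 0}
    norm = sum(covered.values())
    return sum(w * correct[c] / total[c] for c, w in covered.items()) / norm


results = [
    ("adding-pages", "adding-pages"),
    ("adding-pages", "adding-pages"),
    ("workflows", "workflows"),
    ("workflows", "app-readme"),  # a misclassification
]
share = {"adding-pages": 0.8, "workflows": 0.2}
print(f"unweighted: {sum(t == p for t, p in results) / len(results):.2f}")  # 0.75
print(f"weighted:   {weighted_accuracy(results, share):.2f}")  # 0.90
```

Here the misclassified category is rare in production, so it pulls the weighted score down less than it pulls down the plain average.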

Built institutional knowledge through scoring functions

The team maintains both general and capability-specific scoring functions. A generic tool_call_success scorer evaluates whether tool calls complete successfully, while specialized scorers like is_app_scope_correct validate specific functionality (in this case, checking that multi-page apps correctly scope dependencies between pages).

This approach lets them track both overall quality trends and the health of specific features, creating a detailed quality map of their entire system.
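A minimal sketch of the two kinds of scorer as plain Python functions that return a score between 0 and 1. The trace and app shapes are invented for illustration, and `is_app_scope_correct` encodes only one guess at what "correctly scoped" means, since the exact check Retool uses isn't described here. Wiring the functions into an eval harness such as Braintrust's is left out.

```python
def tool_call_success(output: dict):
    """Fraction of tool calls in a trace that completed successfully.

    Assumes a hypothetical trace shape: {"tool_calls": [{"name": ..., "status": ...}]}.
    Returns None when the trace made no tool calls, so it is not scored.
    """
    calls = output.get("tool_calls", [])
    if not calls:
        return None
    return sum(c.get("status") == "success" for c in calls) / len(calls)


def is_app_scope_correct(output: dict) -> float:
    """Hypothetical check: every resource a page depends on is either
    app-global or scoped to that same page. Returns 1.0 or 0.0."""
    resources = output.get("resources", {})
    for page, spec in output.get("pages", {}).items():
        for dep in spec.get("depends_on", []):
            if resources.get(dep, {}).get("scope") not in ("global", page):
                return 0.0
    return 1.0


app = {
    "pages": {
        "home": {"depends_on": ["users_query"]},
        "settings": {"depends_on": ["prefs_query"]},  # wrongly uses a home-scoped query
    },
    "resources": {
        "users_query": {"scope": "global"},
        "prefs_query": {"scope": "home"},
    },
    "tool_calls": [
        {"name": "add_page", "status": "success"},
        {"name": "edit_query", "status": "error"},
    ],
}
print(tool_call_success(app), is_app_scope_correct(app))
```

The generic scorer applies to every trace, while the specific one only makes sense for multi-page generations; keeping them separate is what lets a dashboard show both overall and per-feature health.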

Key takeaways: Observability as a roadmap tool

Retool's experience demonstrates that production observability can be a product development tool in addition to a debugging tool. Their approach offers several lessons for teams building AI features:

1. Instrument user intent, not just outputs

Most teams log inputs and outputs, but Retool goes further by explicitly classifying what users are trying to accomplish. This creates a direct mapping between user needs and engineering priorities.

2. Prioritize by impact, not just frequency

Retool's "blast radius" metric (error rate × volume) ensures the team works on the issues that cause the most failures for users, not just the ones with the highest error rates. A tool that fails rarely but handles thousands of requests can matter more than one that fails often but handles only a few dozen.

3. Make production data accessible to the whole team

By using Loop to query production data semantically and building dashboards on top of logs, Retool ensures that production insights inform everyone's decisions, not just the on-call engineer's. Team members can ask questions in natural language and get immediate answers without writing complex queries. Because everyone works from the same data, the team shares an understanding of quality and priorities.

4. Close the loop from logs to datasets to evals

Production failures become test cases. Retool maintains a workflow where problematic traces get curated into datasets, datasets drive scoring function development, and scoring functions run on every change. This creates a continuous improvement cycle where the system is tested against exactly the scenarios where it previously failed.
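The first step of that cycle, turning failing traces into dataset candidates, can be sketched as a filter over scored traces. This is a minimal illustration under assumed field names (`id`, `input`, `scores`) and a hypothetical function name, not Braintrust's dataset API; in practice the resulting rows would be reviewed, given expected outputs, and uploaded to a dataset before they drive evals.

```python
def curate_failures(traces, scorer_names, threshold=1.0):
    """Turn production traces that scored below `threshold` on any scorer
    into candidate dataset rows, skipping duplicate inputs.

    Each row keeps a pointer back to its source trace. An `expected` value
    still has to be added by a human reviewer before the row is useful for evals.
    """
    seen = set()
    rows = []
    for trace in traces:
        scores = trace.get("scores", {})
        failed = [s for s in scorer_names
                  if scores.get(s) is not None and scores[s] < threshold]
        if not failed or trace["input"] in seen:
            continue
        seen.add(trace["input"])
        rows.append({
            "input": trace["input"],
            "metadata": {"source_trace": trace["id"], "failed_scorers": failed},
        })
    return rows


traces = [
    {"id": "t1", "input": "Add a settings page",
     "scores": {"tool_call_success": 1.0, "is_app_scope_correct": 0.0}},
    {"id": "t2", "input": "Rename the home page",
     "scores": {"tool_call_success": 1.0}},
    {"id": "t3", "input": "Add a settings page",
     "scores": {"tool_call_success": 0.5}},
]
for row in curate_failures(traces, ["tool_call_success", "is_app_scope_correct"]):
    print(row)
```

Keeping the failing scorer names in the row's metadata makes it easy to check, on the next eval run, whether the specific failure that put a case in the dataset has actually been fixed.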

Conclusion

"Without Braintrust, we couldn't do any of this. It's critical for what we do and how we do it."

Retool's workflow demonstrates that effective AI development requires systematic observability and the discipline to let data drive decisions. By using Loop to query production data semantically and treating logs as their primary source of roadmap insights, Retool has built a process that surfaces the highest-impact work, prevents regressions, and ensures engineering effort aligns with actual user needs.

Loop turns their production data into better AI, helping them understand production behavior faster and improve continuously through data-driven iteration.

