7 best prompt management tools in 2026 (tested and compared)

- Best overall (editing, versioning, evaluation integration, environment deployment): Braintrust

- Best no-code prompt editor: PromptLayer

- Best for LangChain/LangGraph frameworks: LangSmith

- Best for visual workflow building: Vellum

- Best for branching and merging prompts: PromptHub

- Best for existing W&B users: W&B Weave

- Best for CLI-based testing and red teaming: Promptfoo

Without a dedicated prompt management system like Braintrust, teams store prompts in code but coordinate changes through Notion docs, Slack threads, or code comments. When an AI feature breaks in production, no one knows which prompt version is running, and engineers spend hours comparing text files to find what changed.

Prompt management tools bring order to this chaos by tracking changes, connecting prompt updates to test results, and providing teams with a shared workspace for iteration. Teams using dedicated prompt management tools can ship faster and catch regressions before users notice them. This article compares seven leading prompt management tools.

What is prompt management?

Prompt management refers to the systems and practices teams use to version, organize, test, and deploy prompts across different environments. Prompt management treats prompts as configurable assets that can be updated, rolled back, and monitored independently of software releases.

Prompt management platforms help teams track every change to their prompts, control which versions run in different environments, and collaborate without overwriting each other's work. Most platforms include:

- Version control and history: Every prompt change is saved with a unique identifier. Teams can compare versions, see what changed, and revert to previous versions when needed.

- Environment management: Prompts move through separate environments (development, staging, production) before reaching users. This prevents untested changes from affecting live applications.

- Collaborative editing: Product managers, engineers, and subject matter experts work on prompts in a shared interface. Changes are tracked by author and timestamp.
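
The version and environment features listed above can be pictured with a small in-memory sketch. This is not how any tool in this article is implemented; `PromptRegistry` and its methods are hypothetical names chosen for illustration, and the content hash is just one possible way to produce a unique identifier.

```python
import hashlib
from dataclasses import dataclass, field


@dataclass
class PromptRegistry:
    """Toy registry (hypothetical): stored versions plus per-environment pointers."""
    versions: dict = field(default_factory=dict)      # version id -> prompt text
    history: list = field(default_factory=list)       # version ids in save order
    environments: dict = field(default_factory=dict)  # env name -> version id

    def save(self, text: str) -> str:
        # Derive the id from the content so identical text maps to one version.
        version_id = hashlib.sha256(text.encode("utf-8")).hexdigest()[:8]
        if version_id not in self.versions:
            self.versions[version_id] = text
            self.history.append(version_id)
        return version_id

    def deploy(self, env: str, version_id: str) -> None:
        if version_id not in self.versions:
            raise KeyError(f"unknown version: {version_id}")
        self.environments[env] = version_id

    def get(self, env: str) -> str:
        # Look up whichever version the environment currently points at.
        return self.versions[self.environments[env]]


registry = PromptRegistry()
v1 = registry.save("Summarize the ticket in one sentence.")
v2 = registry.save("Summarize the ticket in one sentence. Use a neutral tone.")
registry.deploy("production", v1)
registry.deploy("staging", v2)
```

In this sketch, a rollback is nothing more than calling `deploy` again with an earlier version id, which is why separating versions from environment pointers is a common design choice.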

The 7 best prompt management tools in 2026

1. Braintrust

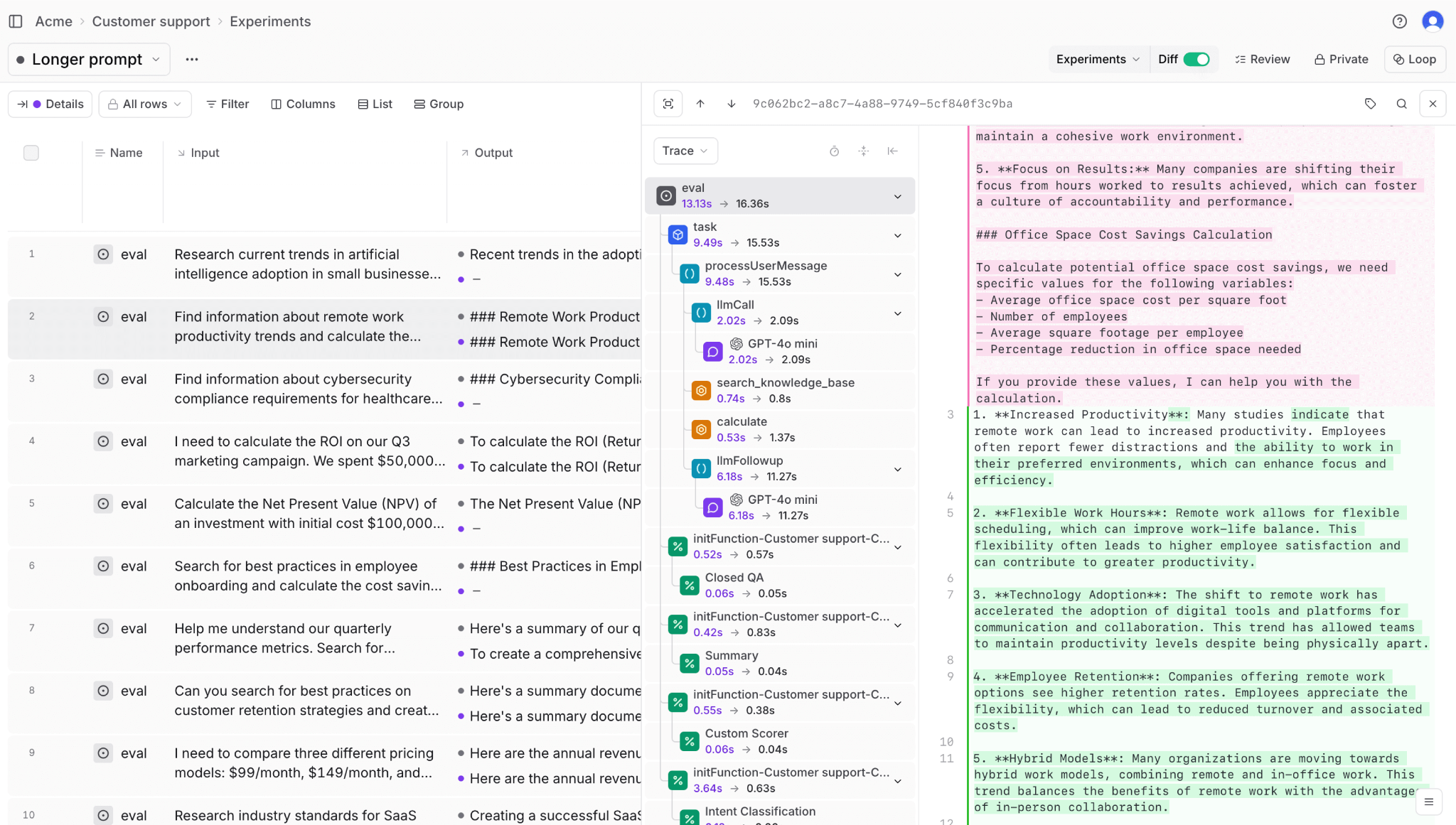

Braintrust lets you version prompts, test them against real data, and deploy them across environments from one platform. Loop, Braintrust's AI co-pilot, lets non-technical teams iterate on prompts through natural language instructions: it generates test datasets, runs evaluations, and optimizes prompts without requiring anyone to write code.

Braintrust's environment-based deployment separates it from competitors. You can set up separate environments for development, staging, and production, and prompts move to the next environment only after passing defined quality gates. A prompt that fails evaluation in staging is never promoted to production automatically.
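
To make the idea of a quality gate concrete, here is a minimal sketch of gated promotion. It does not use the Braintrust SDK; the function names, the mean-score rule, and the 0.8 threshold are all assumptions made for illustration.

```python
from statistics import mean

ENV_ORDER = ["development", "staging", "production"]


def passes_gate(scores: list[float], threshold: float) -> bool:
    """A version passes when it has scores and their mean meets the threshold."""
    return bool(scores) and mean(scores) >= threshold


def promote(current_env: str, scores: list[float], threshold: float = 0.8) -> str:
    """Return the environment a prompt version belongs in after evaluation."""
    idx = ENV_ORDER.index(current_env)
    if idx == len(ENV_ORDER) - 1:
        return current_env  # already in the last environment
    if passes_gate(scores, threshold):
        return ENV_ORDER[idx + 1]
    return current_env  # failed the gate, so it stays where it is


print(promote("staging", [0.9, 0.85, 0.95]))  # prints: production
print(promote("staging", [0.9, 0.4, 0.5]))    # prints: staging
```

A real gate would likely combine several scorers and compare against the currently deployed version rather than a fixed threshold.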

In the prompt playground, you can test prompts on real data, swap models, and compare outputs side by side. Engineers update prompts directly in the application code using the SDK, while product managers review and refine those same prompts within the playground. Prompt edits sync bidirectionally, so neither workflow blocks the other.

Braintrust's GitHub Action runs evaluations whenever a prompt changes in a pull request. This GitHub integration ensures prompt updates follow the same review and validation process as code changes. Once prompts reach production, Braintrust tracks which version is serving live traffic. If a prompt starts producing lower-quality outputs, the Braintrust dashboard surfaces the quality drop alongside the specific prompt version responsible.

Best for

Product teams that need to iterate quickly on prompts with robust evaluations to ensure changes improve accuracy.

Pros

- Loop automates dataset creation, scorer generation, and prompt optimization from natural language instructions

- Playground enables side-by-side model comparison, parameter tuning, and real-time team collaboration in one interface

- Prompt slugs let users configure prompts in the Braintrust app and reference them directly in code

- Production failures automatically become quality checks that guard against repeating the same error

- Built-in dataset management links test cases directly to prompt versions for systematic quality tracking

- Prompt variables support complex templating with type validation and auto-complete in the editor

- Export/import functionality enables prompt portability across environments and backup workflows

Cons

- Best results require adopting structured evaluation practices alongside versioning

- Learning curve for teams new to systematic prompt testing and quality measurement

Pricing

Free tier with 1M trace spans and unlimited users. Pro plan at $249/month. Enterprise pricing available on request.

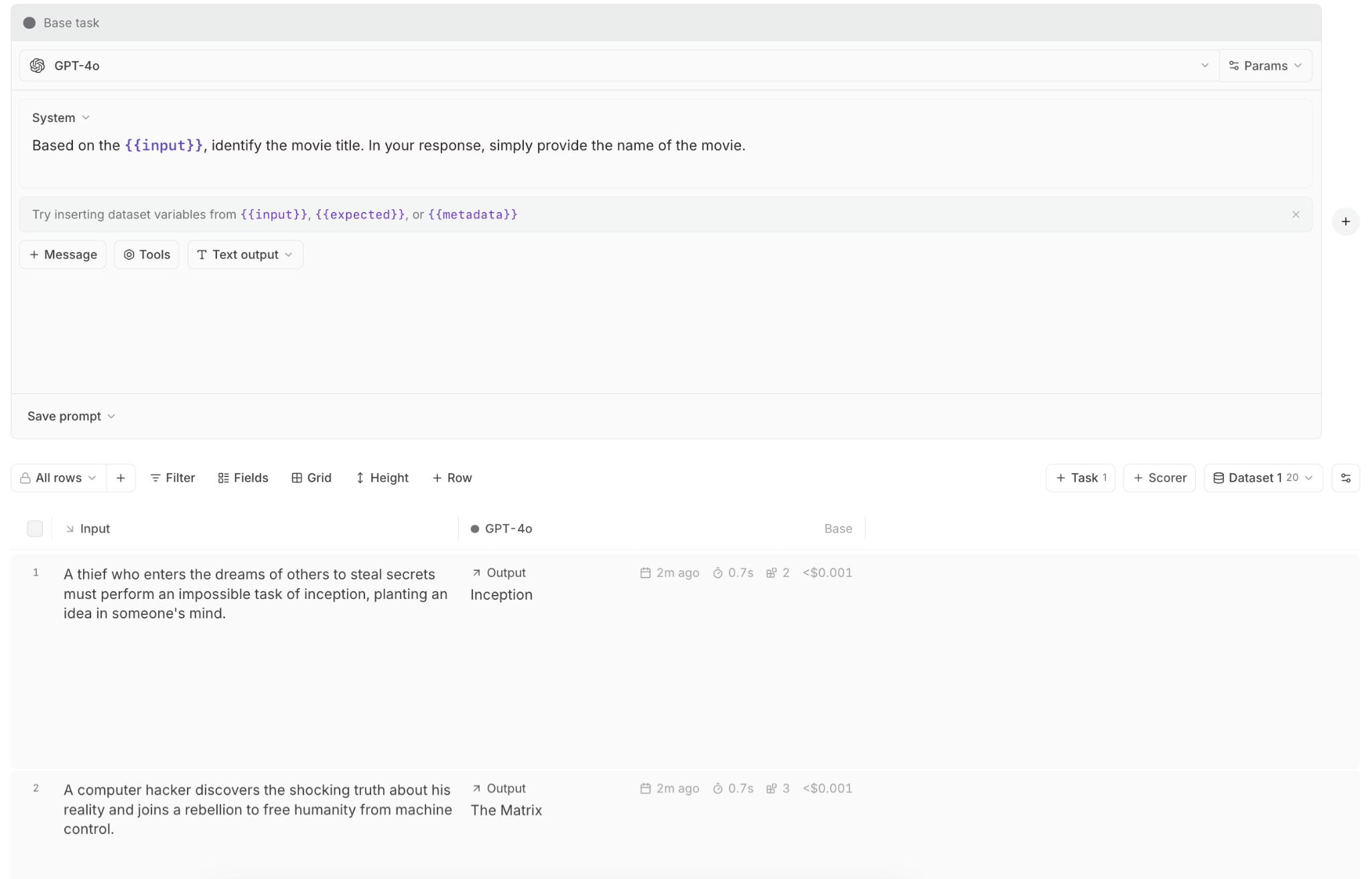

2. PromptLayer

PromptLayer connects your application to LLM providers, logs all requests, and provides a visual workspace for managing prompt versions and deployments. It's built for non-technical teams, allowing anyone to edit prompts, test variations, and push changes live without writing code or waiting on engineering resources.

Best for

Teams whose non-technical staff need to update and deploy prompts through a visual interface.

Pros

- Visual editor requires no code for prompt creation and versioning

- Jinja2 and f-string templating for dynamic prompts

- Built-in A/B testing with traffic splitting

- Automatic logging captures all LLM requests with metadata

- One-click model switching across OpenAI, Anthropic, and others
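
Traffic splitting for A/B tests, mentioned in the list above, is often done by hashing a stable identifier into a bucket. The sketch below shows that general technique; it is not PromptLayer's implementation, and `assign_variant` and the variant names are hypothetical.

```python
import hashlib


def assign_variant(user_id: str, split: dict[str, int]) -> str:
    """Deterministically map a user to a prompt variant.

    `split` maps variant names to integer percentages that sum to 100.
    """
    if sum(split.values()) != 100:
        raise ValueError("percentages must sum to 100")
    # Hash the user id into a stable bucket in [0, 100).
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % 100
    upper = 0
    for variant, percent in split.items():
        upper += percent
        if bucket < upper:
            return variant
    raise AssertionError("unreachable when percentages sum to 100")


split = {"prompt_v1": 90, "prompt_v2": 10}
counts = {"prompt_v1": 0, "prompt_v2": 0}
for i in range(10_000):
    counts[assign_variant(f"user-{i}", split)] += 1
```

Because the assignment depends only on the user id, the same user sees the same prompt variant on every request, which keeps the comparison between variants clean.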

Cons

- CI/CD integration requires manual setup compared to platforms with native pipeline automation

- The evaluation framework is less comprehensive than dedicated testing platforms

- Acts as a proxy layer that may introduce latency for time-sensitive applications

- Limited native support for complex multi-step agent workflow orchestration

Pricing

Free tier with 10 prompts and 2,500 requests/month. Paid plan starts at $49/month. Enterprise pricing with self-hosting available on request.

3. LangSmith

LangSmith provides prompt versioning and a prompt playground for teams building with LangChain or LangGraph. Prompts stored in LangSmith Hub load directly into your LangChain code, and LangSmith tracks every version with full change history. The playground supports prompt testing, cross-model output comparison, and automated or manual evaluation reviews.

Best for

LangChain or LangGraph users who want prompt management integrated with their existing framework.

Pros

- Native LangChain/LangGraph integration loads prompts directly from LangSmith Hub

- Full-stack tracing captures inputs, outputs, tool calls, and decision steps

- Evaluation framework supports automated and human review

- Prompt Hub and Playground for versioning and experimentation

- Production dashboards track latency, errors, and token usage

Cons

- Strongly tied to LangChain, limiting flexibility outside that ecosystem

- Versioning and environment management features are weaker than core observability features

- Usage-based pricing scales quickly with trace volume, making costs unpredictable at scale

Pricing

Free tier with 5,000 traces/month. Paid plan starts at $39 per user/month. Enterprise pricing with self-hosting available on request.

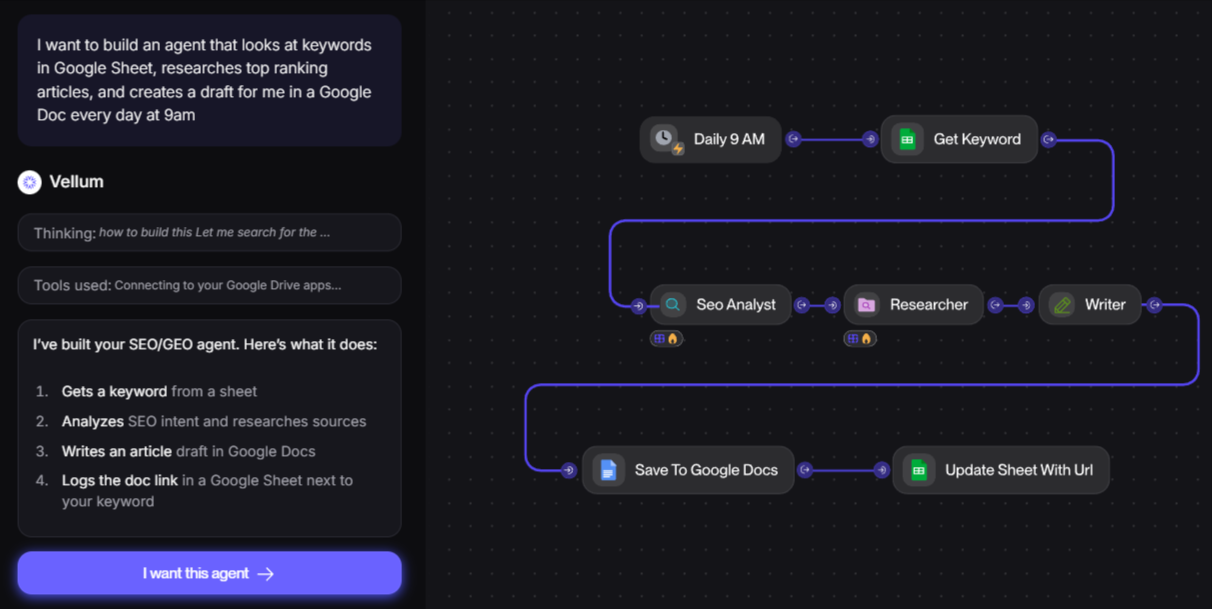

4. Vellum

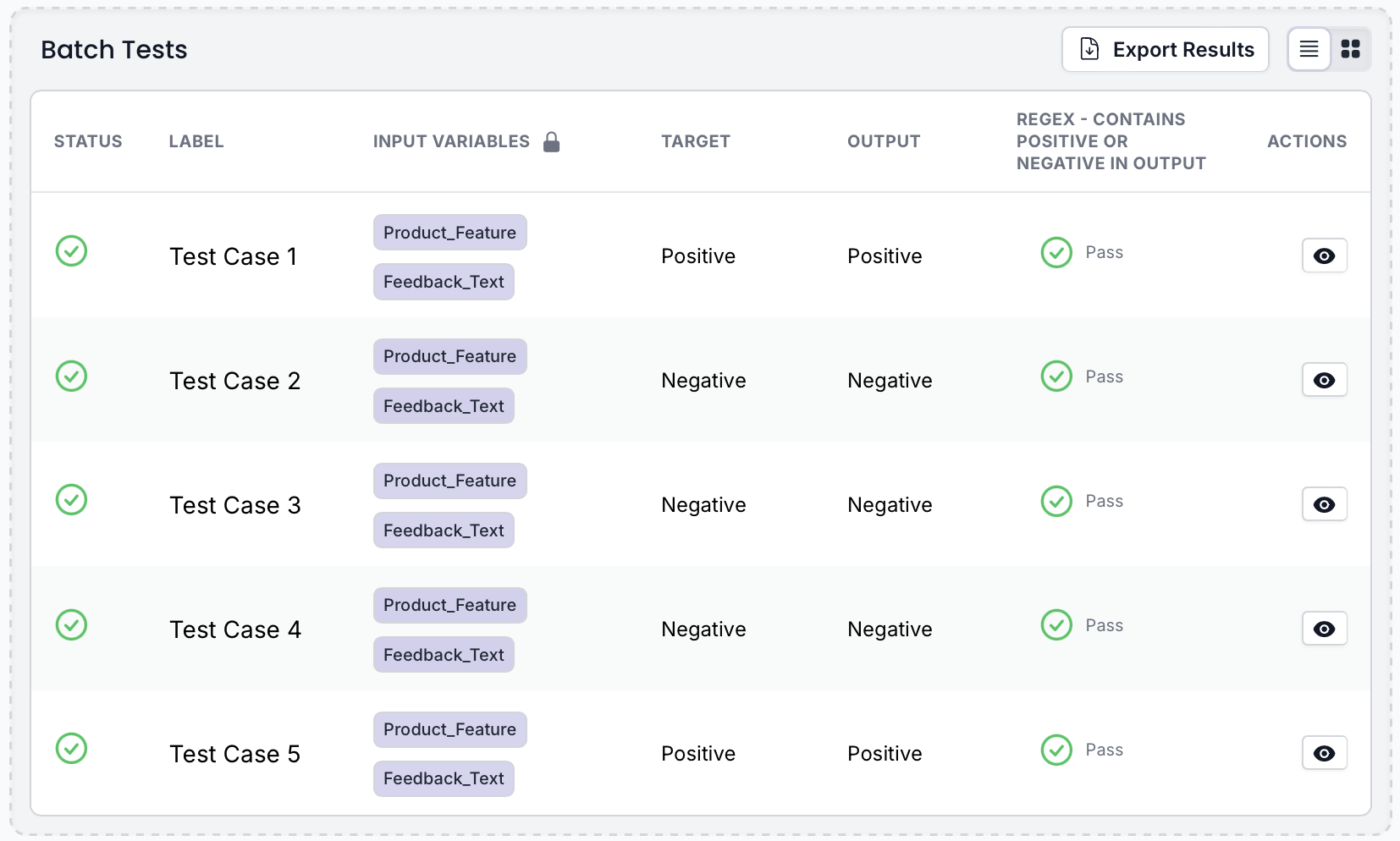

Vellum provides a visual prompt playground for testing and deploying prompts across multiple models and providers. Teams can compare different prompt-model combinations side-by-side, evaluate outputs against test cases, and deploy prompt changes without code modifications. Vellum includes workflow orchestration tools that let users build multi-step AI logic through a visual interface, along with evaluation capabilities.

Best for

Teams developing AI workflows where both technical and non-technical users need to collaborate.

Pros

- Visual workflow builder allows non-technical teams to create AI logic without code

- Built-in RAG infrastructure for uploading and querying unstructured data

- SDK support for Python and JavaScript alongside visual tools

- Staging environments for safe iteration before production deployment

Cons

- Workflow-first design limits visibility outside the visual graph

- Evaluation features are inflexible and closely tied to Vellum-managed workflows

- Limited depth for analyzing agent reasoning beyond execution steps

Pricing

Free tier with 30 credits per month and one concurrent workflow. Paid plan starts at $25/month with 100 credits per month. Custom enterprise pricing.

5. PromptHub

PromptHub provides Git-style version control for prompts, letting teams branch, commit, and merge prompt changes the same way they manage code. PromptHub includes a REST API to retrieve prompts at runtime, CI/CD guardrails that block deployments of low-quality prompts, and prompt chaining for multi-step workflows.

Best for

Organizations that want to manage prompts with Git-style branching, merging, and pull request workflows.

Pros

- Git-based versioning with branching, commits, and merge workflows

- Prompt chaining for multi-step reasoning pipelines

- CI/CD guardrails block secrets, profanity, and regressions before deployment

- REST API retrieves prompts with variable injection

- Community library for discovering and sharing prompts
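
A pre-deployment guardrail of the kind described above can be approximated with a few pattern checks. This sketch is only an illustration of the idea, not PromptHub's API; the patterns and the blocklist are placeholder assumptions, and a real pipeline would rely on a maintained secret scanner.

```python
import re

# Illustrative patterns only (assumptions, not an exhaustive scanner).
SECRET_PATTERNS = [
    re.compile(r"sk-[A-Za-z0-9]{20,}"),  # API-key-like token
    re.compile(r"AKIA[0-9A-Z]{16}"),     # AWS-access-key-like id
]
BLOCKED_WORDS = {"damn"}  # placeholder blocklist


def check_prompt(text: str) -> list[str]:
    """Return guardrail violations; an empty list means the prompt may ship."""
    violations = []
    for pattern in SECRET_PATTERNS:
        if pattern.search(text):
            violations.append(f"possible secret matching {pattern.pattern}")
    words = set(re.findall(r"[a-z']+", text.lower()))
    for word in sorted(words & BLOCKED_WORDS):
        violations.append(f"blocked word: {word}")
    return violations
```

A CI job could call `check_prompt` on every changed prompt and fail the build when the returned list is not empty.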

Cons

- Evaluation features are more basic than dedicated testing platforms

- Versioning is Git-style but lacks deeper production lifecycle controls

- Limited support for environment-based deployment workflows

- Less suitable for teams needing advanced observability or CI-driven checks

Pricing

Free tier with unlimited seats, no private prompts, and limited API access. Paid plans start at $12/user/month. Enterprise pricing available on request.

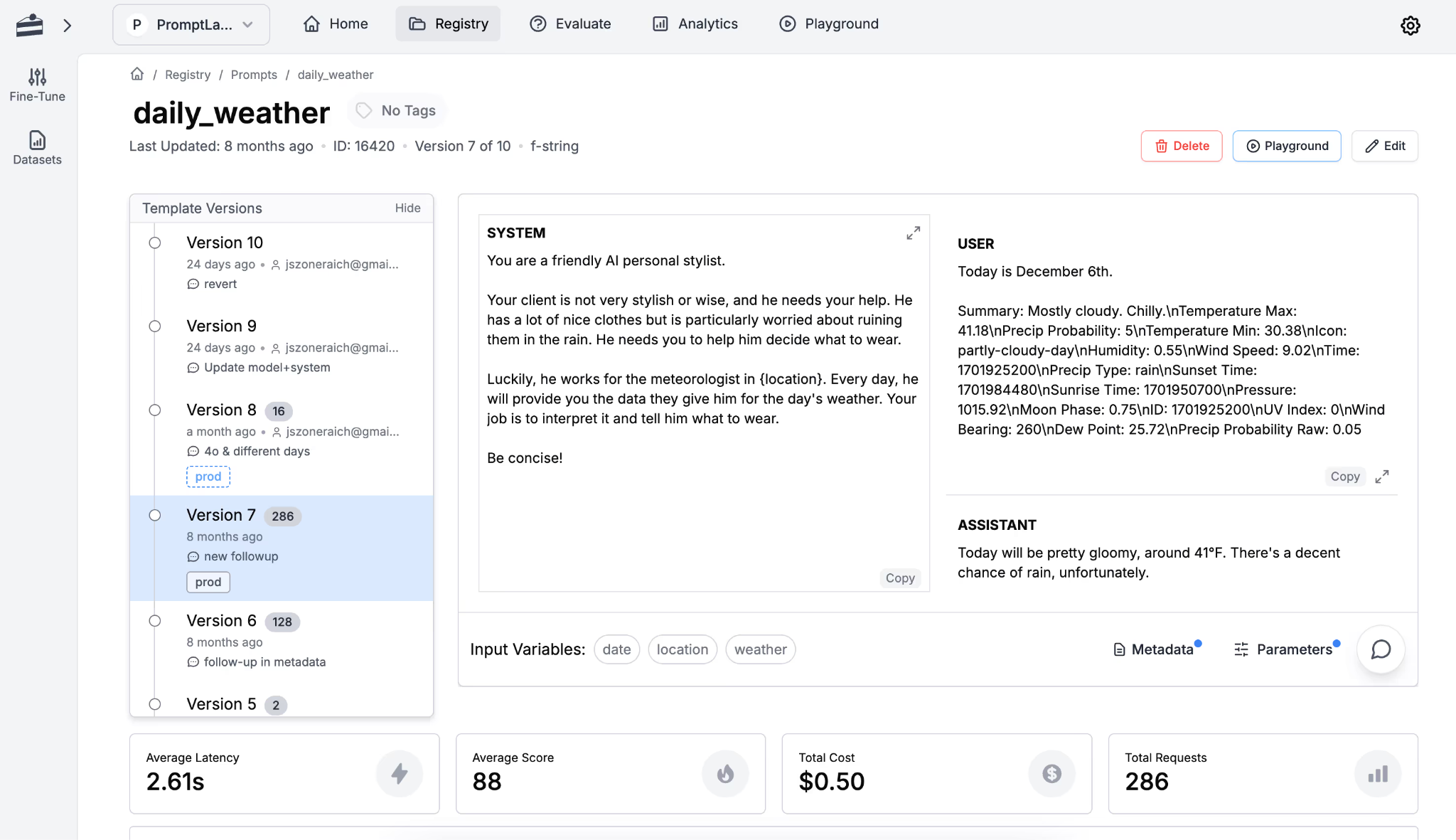

6. Weights & Biases (W&B Weave)

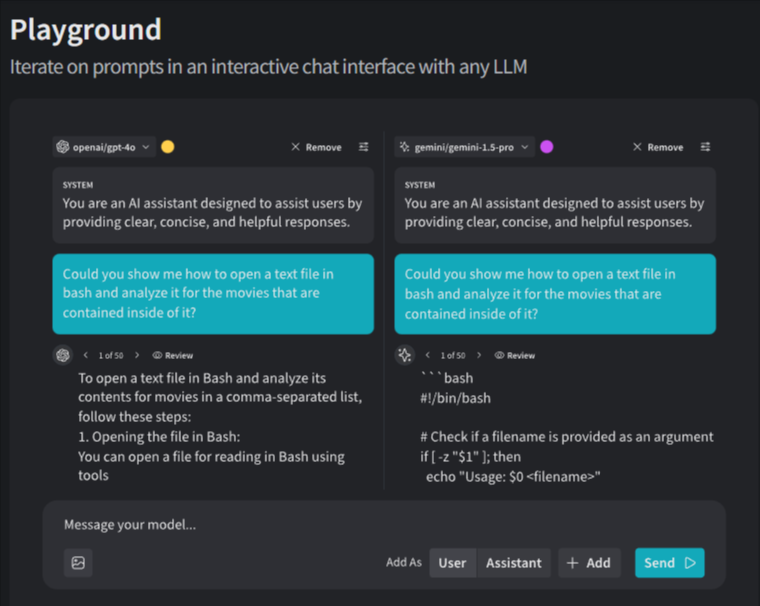

W&B Weave adds prompt management to the Weights & Biases ML experiment platform. You can iterate on prompts in an interactive playground, compare outputs across different models, and track prompt performance through evaluation leaderboards. Weave integrates with your existing W&B setup, so teams already using Weights & Biases for ML experiments can manage prompts without adding another tool.

Best for

Teams already using Weights & Biases for ML experiments who want to add prompt management to their existing setup.

Pros

- One-line instrumentation with @weave.op decorator

- Interactive playground for prompt iteration

- Evaluation leaderboards compare prompt/model combinations

- Guardrails detect harmful outputs and prompt attacks
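
For readers unfamiliar with decorator-based instrumentation, the sketch below shows the general pattern behind a one-line tracing decorator. It deliberately does not import Weave; `traced` and `CALL_LOG` are hypothetical stand-ins that record calls in memory rather than sending them to a tracing backend.

```python
import functools
import time

CALL_LOG = []  # hypothetical in-memory store standing in for a tracing backend


def traced(func):
    """Record the inputs, output, and duration of each call to `func`."""
    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        start = time.perf_counter()
        result = func(*args, **kwargs)
        CALL_LOG.append({
            "name": func.__name__,
            "args": args,
            "kwargs": kwargs,
            "output": result,
            "seconds": time.perf_counter() - start,
        })
        return result
    return wrapper


@traced
def build_prompt(question: str) -> str:
    return f"Answer concisely: {question}"


build_prompt("What is prompt management?")
```

The appeal of this pattern is that the decorated function's body stays unchanged, so instrumentation can be added or removed one line at a time.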

Cons

- Prompt-specific workflows (environment deployment, PM collaboration) are less developed than dedicated tools

- Learning curve if you're unfamiliar with W&B concepts like runs and artifacts

- Production deployment features require custom solutions

Pricing

Free tier with limited seats, storage, and ingestion. Paid plans start at $60 per month. Enterprise pricing available on request.

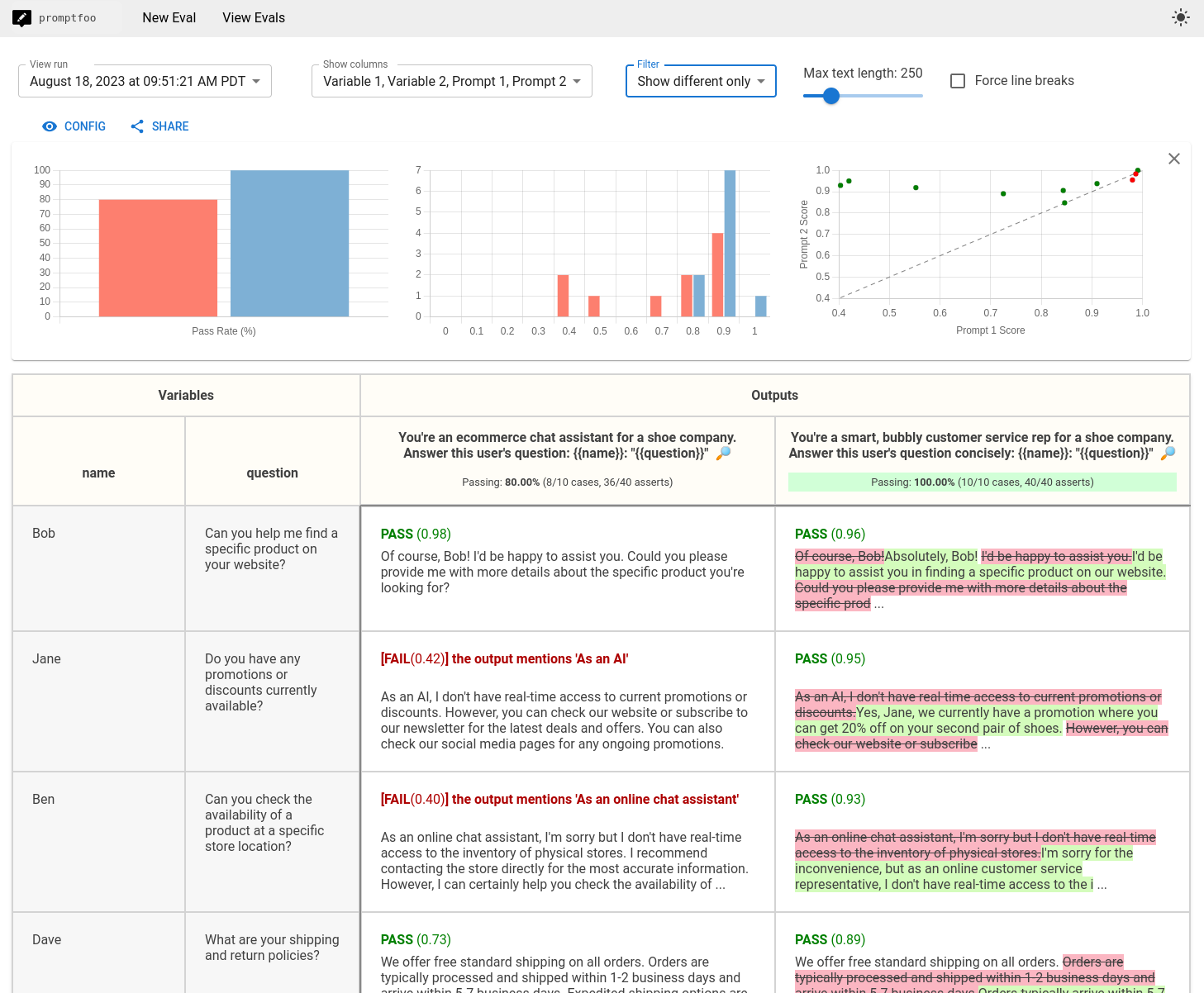

7. Promptfoo

Promptfoo is an open-source command-line tool for evaluating and testing LLM prompts and applications. Prompts and test cases are defined in configuration files such as YAML that live in the code repository, allowing teams to run batch evaluations across different models and prompt variations. Promptfoo also includes built-in security scanning to detect issues like prompt injection, PII exposure, and jailbreak risks.
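
The batch evaluation described above amounts to running every prompt variation against every test case and checking assertions on the output. The following sketch shows that matrix in plain Python; it is not Promptfoo's configuration format or API, and `fake_model`, `run_matrix`, and the `must_contain` check are assumptions used in place of a real model call and assertion types.

```python
from itertools import product

prompts = {
    "short": "Summarize: {text}",
    "strict": "Summarize in one sentence and mention the product name: {text}",
}
test_cases = [
    {"vars": {"text": "Acme Sync failed to upload files."}, "must_contain": "Acme"},
    {"vars": {"text": "Acme Pay charged the card twice."}, "must_contain": "Acme"},
]


def fake_model(prompt: str) -> str:
    """Stand-in for an LLM call: returns the text after the first colon."""
    return prompt.split(":", 1)[1].strip()


def run_matrix(prompts, test_cases, model):
    """Evaluate every prompt template against every test case."""
    results = []
    for (name, template), case in product(prompts.items(), test_cases):
        output = model(template.format(**case["vars"]))
        results.append({"prompt": name, "passed": case["must_contain"] in output})
    return results


results = run_matrix(prompts, test_cases, fake_model)
```

Keeping prompts and test cases as plain data, as here, is what makes it practical to store them in the repository and rerun the full matrix on every change.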

Best for

Teams that manage prompts through command-line tools and operate in regulated industries where vulnerability scanning is essential.

Pros

- Fully open-source with no feature restrictions

- Declarative YAML/JSON configs are versioned in Git

- Built-in red teaming: PII leaks, prompt injections, jailbreaks, toxic outputs

- Native CI/CD integration across major platforms

Cons

- Requires technical expertise: YAML configs, CLI, JavaScript/Python for advanced use

- No pre-built test scenarios, so teams must create all test cases manually

- Self-hosting requires infrastructure management

Pricing

Free tier with unlimited open-source use and up to 10k red-team probes per month. Enterprise pricing is customized based on team size and needs.

Best prompt management tools compared

| Tool | Starting Price | Best For | Notable Strength |
|---|---|---|---|
| Braintrust | Free (Pro: $249/month) | Teams running prompts in production who care about output quality and user experience | Evaluation-first prompt management with environment deployment, Loop for no-code prompt optimization, CI checks, and production monitoring |
| PromptLayer | Free (Pro: $49/month) | Non-technical teams editing and deploying prompts | No-code visual editor with A/B testing and model switching |
| LangSmith | Free (Plus: $39/user/month) | LangChain and LangGraph teams | Deep native integration with LangChain and full-stack tracing |
| Vellum | Free (Pro: $25/month) | Teams building AI workflows with visual tools | Side-by-side model comparison with no-code workflow orchestration |
| PromptHub | Free (Paid from $12/user/month) | Teams that want Git-style prompt workflows | Branching, merging, and CI guardrails for prompts |
| W&B Weave | Free (Paid from $60/month) | Existing Weights & Biases users | Extends ML experiment tracking to LLM prompts |
| Promptfoo | Free open source (Custom enterprise pricing) | CLI-driven teams in regulated environments | Batch testing and red-team security scanning |

Protect user experience with every prompt change. Start free with Braintrust.

Why Braintrust is the best prompt management tool

Most prompt management tools focus on versioning. They store prompts, track edits, and allow rollbacks when something breaks. That helps teams see what changed, but it does not show whether those changes improved results.

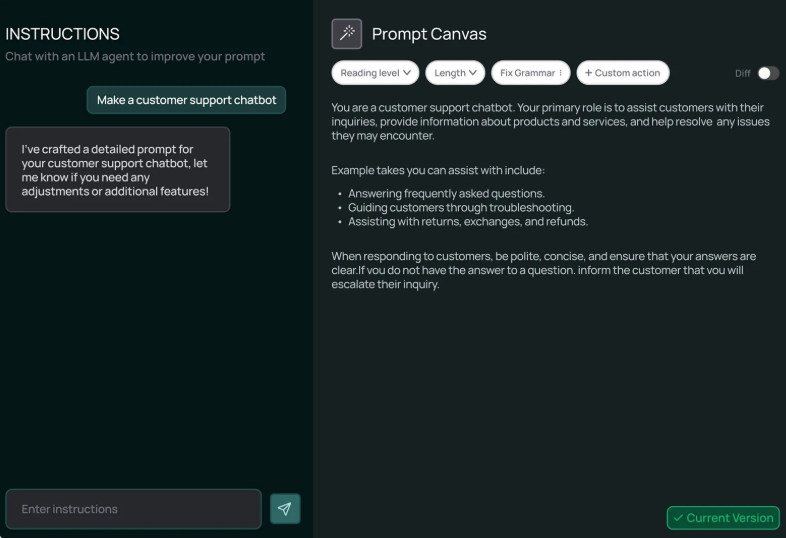

Braintrust connects versioning directly to quality measurement. Loop enables product teams to iterate on prompts through natural language, automatically generating test datasets and running evaluations. Every prompt update is evaluated against real test data, so teams can see whether output quality improves or degrades before changes reach users. Once deployed, Braintrust monitors live traffic and surfaces quality drops as they happen. Versioning, testing, and production monitoring all live in one system, making it easy to trace any issue back to the exact prompt change that caused it.

Version history alone cannot trace production failures back to the specific prompt changes that caused them. Tying versions to evaluation and monitoring provides that traceability, which helps prevent revenue loss and compliance violations when AI quality directly affects customer trust.

Teams at Notion, Stripe, Zapier, and Vercel use Braintrust to manage prompts in production. Notion went from catching 3 issues per day to 30 after adopting Braintrust, and now ships changes with more confidence.

Start with Braintrust's free tier to see how it can help your team ship prompt changes safely and protect user experience before issues reach production.

Prompt management FAQs

What is prompt management?

Prompt management is the practice of treating prompts as production assets. It includes versioning, testing, and controlled deployment so teams can track changes, validate quality, and understand how prompts behave in real applications before and after release.

How do I choose the right prompt management tool?

Start with your workflow needs. If measuring prompt quality through evaluation is critical, choose a platform that ties versioning directly to testing, such as Braintrust. If non-technical teams need to edit prompts independently, no-code tools like PromptLayer are a good fit. LangChain-heavy teams benefit from native tooling such as LangSmith, while teams with strict data residency or CLI-driven workflows may prefer open-source options such as Promptfoo.

Which products help me optimize prompts with AI?

Braintrust's AI assistant, Loop, automatically optimizes prompts. Loop generates test datasets, runs evaluations, and iterates on prompts based on natural language instructions, enabling product teams to improve prompt quality without manual testing. Loop's conversational approach to optimization is unique in combining dataset generation, evaluation, and iteration in one AI-powered workflow.

What are the best alternatives to PromptLayer?

Braintrust is a strong alternative for teams that need testing and quality validation in addition to collaboration. PromptLayer works well for no-code prompt editing, but its evaluation capabilities are more limited. Braintrust offers similar collaboration features while adding environment-based deployment, automated evaluation, and production monitoring.