- Prompts: LLM prompts with model configuration and templating (see Deploy prompts)

- Tools: General-purpose code that LLMs can invoke to perform operations or access external data

- Scorers: Functions for evaluating LLM output quality (returning a number from 0 to 1)

- Workflows: Chains of two or more prompts for multi-step workflows

Security: For Braintrust-hosted deployments and self-hosted deployments on AWS, functions run in isolated AWS Lambda environments within a dedicated VPC that has no access to internal infrastructure. See code execution security for details.

Composability

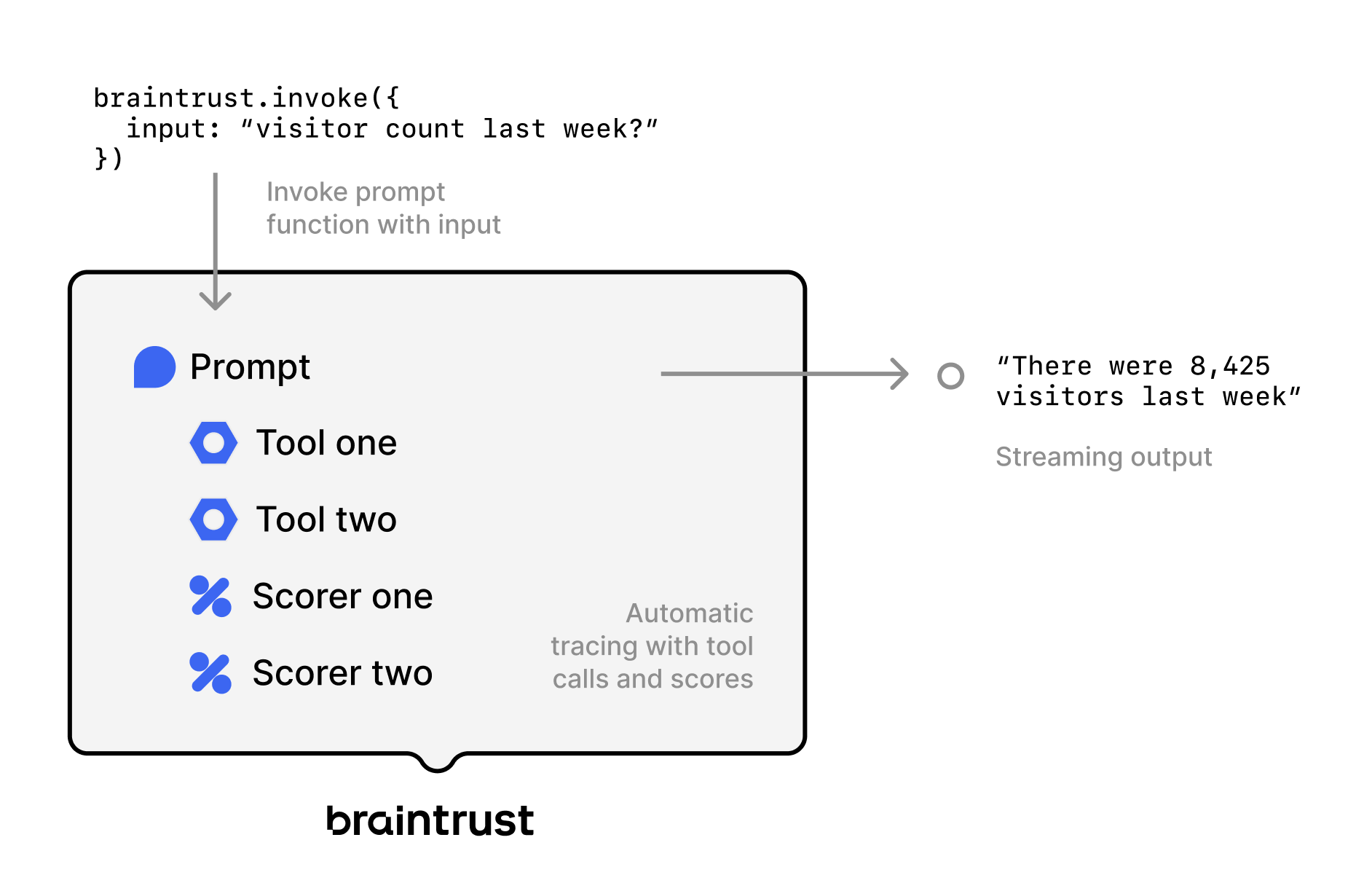

Functions can be composed together to produce sophisticated applications without complex orchestration logic. In this diagram, a prompt is being invoked with an input and calls two different tools and scorers to ultimately produce a streaming output. Out of the box, you also get automatic tracing, including the tool calls and scores.

Any function can be used as a tool. For example, a RAG agent can be defined as just two components:


- A vector search tool that embeds a query, searches for relevant documents, and returns them

- A system prompt with instructions for how to retrieve content and synthesize answers using the tool
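The two components above can be sketched in a few lines. This is a toy illustration only: the bag-of-words `embed` function stands in for a real embedding model, `DOCUMENTS` is a hypothetical in-memory corpus rather than a vector database, and `SYSTEM_PROMPT` is example wording, not text from Braintrust.

```python
import math
import re
from collections import Counter

# Hypothetical corpus; a real tool would query a vector store instead.
DOCUMENTS = [
    "Functions can be invoked through the REST API, SDK, or UI.",
    "Scorers return a number from 0 to 1.",
    "Tools are general-purpose code that LLMs can invoke.",
]


def embed(text: str) -> Counter:
    """Toy embedding: word counts (a stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[token] * b[token] for token in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def vector_search(query: str, top_k: int = 2) -> list[str]:
    """Component 1: embed the query, rank documents, return the best matches."""
    query_vector = embed(query)
    ranked = sorted(
        DOCUMENTS, key=lambda doc: cosine(query_vector, embed(doc)), reverse=True
    )
    return ranked[:top_k]


# Component 2: instructions telling the model how to use the tool.
SYSTEM_PROMPT = (
    "Answer the user's question. Call the vector_search tool to retrieve "
    "relevant documents first, then synthesize an answer from them."
)
```

In a deployed setup, `vector_search` would be registered as a tool and attached to a prompt carrying `SYSTEM_PROMPT`; no extra orchestration code is involved.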

Deploy tools

Tools are functions that LLMs can call to perform complex operations or access external data. Create tools in code and push them to Braintrust:
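As a rough Python sketch of what a tool file might contain: the handler is ordinary code, and a registration step attaches a name, slug, and parameter schema to it. The `project.tools.create` arguments shown here (`handler`, `name`, `slug`, `description`, `parameters`, `if_exists`) reflect one reading of the SDK and should be treated as assumptions to verify against the SDK reference; the project name is hypothetical.

```python
from typing import Literal


def calculator(op: str, a: float, b: float) -> float:
    """Handler: plain Python that an LLM can call as a tool."""
    if op == "add":
        return a + b
    if op == "subtract":
        return a - b
    if op == "multiply":
        return a * b
    if op == "divide":
        return a / b
    raise ValueError(f"unknown operation: {op}")


def register_calculator(project_name: str = "calculator") -> None:
    """Register the handler as a tool (assumed SDK usage, not verified here).

    Requires the `braintrust` and `pydantic` packages; they are imported
    lazily so the handler above stays dependency-free.
    """
    import braintrust
    from pydantic import BaseModel

    class CalculatorInput(BaseModel):
        op: Literal["add", "subtract", "multiply", "divide"]
        a: float
        b: float

    project = braintrust.projects.create(name=project_name)
    project.tools.create(
        handler=calculator,
        name="Calculator",
        slug="calculator",
        description="Adds, subtracts, multiplies, or divides two numbers.",
        parameters=CalculatorInput,
        if_exists="replace",  # assumed option: overwrite an existing tool
    )
```

In a file meant to be pushed, `register_calculator()` would be called at module level so that registration runs when the file is loaded. The handler itself can be unit-tested locally without any Braintrust dependency.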

View and manage tools

Go to Tools to view all deployed tools in your project. Use Filter or the search bar to find specific tools. Click a tool to view its code. To test the tool, enter input data and click Test.

Add tools to prompts

Once deployed, you can add tools to prompts in the UI or via code. See Add tools for more details.

Call tools directly

Call tools via the API without going through a prompt:

Deploy scorers

Scorers evaluate the quality of LLM outputs. See Write scorers for details on creating scorers in the UI or via code.

Deploy workflows

Workflows chain multiple prompts together. Create workflows in playgrounds:

- Navigate to Playgrounds

- Click + Workflow

- Add prompt nodes by selecting + in the comparison pane

- Use template variables to pass data between prompts:

  - {{dataset.input}} - Access dataset inputs

  - {{input}} - Access previous prompt’s output

  - {{input.field}} - Access structured output fields

- Save the workflow
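To make the template variables concrete, here is a minimal sketch of how dotted paths such as `{{input.field}}` can resolve against nested data. It is an illustrative stand-in, not Braintrust's actual template renderer; the `lookup` and `render` helpers and the sample values are hypothetical.

```python
import json
import re
from typing import Any


def lookup(context: dict, path: str) -> Any:
    """Resolve a dotted path such as 'input.field' against nested dicts."""
    value: Any = context
    for key in path.split("."):
        value = value[key]
    return value


def render(template: str, context: dict) -> str:
    """Replace each {{path}} with its value; non-strings are JSON-encoded."""

    def substitute(match: re.Match) -> str:
        value = lookup(context, match.group(1))
        return value if isinstance(value, str) else json.dumps(value)

    return re.sub(r"\{\{\s*([\w.]+)\s*\}\}", substitute, template)


# First prompt: reads from the dataset row.
first = render(
    "Summarize: {{dataset.input}}",
    {"dataset": {"input": "a long article"}},
)

# Second prompt: reads a field of the previous prompt's structured output.
second = render(
    "Translate the title '{{input.title}}' into French.",
    {"input": {"title": "Hello", "score": 0.9}},
)
```

The point of the sketch is the data flow: each prompt in the chain sees the previous prompt's output under `input`, while `dataset.input` always refers to the original dataset row.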

Invoke functions

Functions can be invoked through the REST API, SDK, or UI. When invoking a function, you can reference it by:

- Slug: The unique identifier within a project for any function type (e.g., slug: "calculator")

- Global function name: Built-in Braintrust scorers only - globally unique functions like Factuality from autoevals

Reference a function by its slug within a specific project:
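A hedged sketch of the two reference styles using the Python SDK: the helper below builds the identifying arguments, and `call_function` passes them to `braintrust.invoke`. The keyword names (`project_name`, `slug`, `global_function`, `version`) are assumptions about the SDK's `invoke` signature, and the project name in the usage lines is hypothetical.

```python
from typing import Any, Optional


def function_reference(
    *,
    project_name: Optional[str] = None,
    slug: Optional[str] = None,
    global_function: Optional[str] = None,
    version: Optional[str] = None,
) -> dict:
    """Build keyword arguments identifying a function by slug or global name."""
    if (slug is None) == (global_function is None):
        raise ValueError("pass exactly one of slug or global_function")
    if slug is not None:
        if project_name is None:
            raise ValueError("a slug is unique only within a project")
        reference = {"project_name": project_name, "slug": slug}
    else:
        reference = {"global_function": global_function}
    if version is not None:
        reference["version"] = version  # pin a specific version
    return reference


def call_function(input: Any, **reference: Any) -> Any:
    """Invoke via the SDK (needs the `braintrust` package and an API key)."""
    from braintrust import invoke  # lazy import; assumed signature

    return invoke(input=input, **function_reference(**reference))


# Reference by slug within a project (hypothetical project name).
by_slug = function_reference(project_name="my-project", slug="calculator")

# Reference a built-in scorer by its global name.
by_global_name = function_reference(global_function="Factuality")
```

Calling `call_function({"op": "add", "a": 1, "b": 2}, project_name="my-project", slug="calculator")` would then perform the actual invocation.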

Handle dependencies

Braintrust automatically bundles dependencies for your functions:

- TypeScript: Uses esbuild to bundle code and dependencies (excludes native libraries like SQLite)

- Python: Uses uv to cross-bundle dependencies to Linux (supports most binary dependencies)

Version functions

Like prompts, functions are automatically versioned. Pin specific versions in code:

Use the REST API
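A minimal sketch of an HTTP invocation using only the standard library, with an optional pinned version. The base URL, the `/v1/function/{function_id}/invoke` path, and the `input`, `stream`, and `version` body fields are assumptions to check against the API reference; the function ID and key in the usage lines are placeholders.

```python
import json
import urllib.request
from typing import Any, Optional

API_URL = "https://api.braintrust.dev"  # assumed base URL


def build_invoke_request(
    function_id: str,
    input: Any,
    api_key: str,
    version: Optional[str] = None,
) -> urllib.request.Request:
    """Build (but do not send) a POST request that invokes a function."""
    body = {"input": input, "stream": False}
    if version is not None:
        body["version"] = version  # assumed field for pinning a version
    return urllib.request.Request(
        url=f"{API_URL}/v1/function/{function_id}/invoke",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request: urllib.request.Request) -> Any:
    """Send the request and decode the JSON response."""
    with urllib.request.urlopen(request) as response:
        return json.load(response)


# Placeholder ID and key; nothing is sent until send(request) is called.
request = build_invoke_request(
    "fn-123", {"op": "add", "a": 1, "b": 2}, api_key="sk-placeholder", version="abc"
)
```

Separating request construction from sending keeps the payload easy to inspect and test without network access.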

Call any function via HTTP:

Function features

All functions in Braintrust support:

- Well-defined parameters and return types: Type-safe interfaces using Zod (TypeScript) or Pydantic (Python)

- Streaming and non-streaming invocation: Handle real-time and batch operations

- Automatic tracing and logging: All function calls are traced in Braintrust

- OpenAI argument format: Prompts can be loaded directly in OpenAI-compatible format

- Version control: Functions are automatically versioned with each deployment

Organize functions

Functions are organized into projects using the projects.create() method. This method returns a handle to the project (creating it if it doesn’t exist) that you can use to create tools, prompts, and scorers:

If a project already exists, projects.create() returns a handle to it. There is no separate .get() method.

Next steps

- Write scorers to create custom evaluation functions

- Deploy prompts that use your tools

- Monitor deployments to track function performance

- Manage environments to version functions across environments